The Unwelcome Revival of ‘Race Science’

One of the strangest ironies of our time is that a body of thoroughly debunked “science” is being revived by people who claim to be defending truth against a rising tide of ignorance. The idea that certain races are inherently more intelligent than others is being trumpeted by a small group of anthropologists, IQ researchers, psychologists and pundits who portray themselves as noble dissidents, standing up for inconvenient facts. Through a surprising mix of fringe and mainstream media sources, these ideas are reaching a new audience, which regards them as proof of the superiority of certain races.

The claim that there is a link between race and intelligence is the main tenet of what is known as “race science” or, in many cases, “scientific racism”. Race scientists claim there are evolutionary bases for disparities in social outcomes – such as life expectancy, educational attainment, wealth, and incarceration rates – between racial groups. In particular, many of them argue that black people fare worse than white people because they tend to be less naturally intelligent.

Although race science has been repeatedly debunked by scholarly research, in recent years it has made a comeback. Many of the keenest promoters of race science today are stars of the “alt-right”, who like to use pseudoscience to lend intellectual justification to ethno-nationalist politics. If you believe that poor people are poor because they are inherently less intelligent, then it is easy to leap to the conclusion that liberal remedies, such as affirmative action or foreign aid, are doomed to fail.

There are scores of recent examples of rightwingers banging the drum for race science. In July 2016, for example, Steve Bannon, who was then Breitbart boss and would go on to be Donald Trump’s chief strategist, wrote an article in which he suggested that some black people who had been shot by the police might have deserved it. “There are, after all, in this world, some people who are naturally aggressive and violent,” Bannon wrote, evoking one of scientific racism’s ugliest contentions: that black people are more genetically predisposed to violence than others.

One of the people behind the revival of race science was, not long ago, a mainstream figure. In 2014, Nicholas Wade, a former New York Times science correspondent, wrote what must rank as the most toxic book on race science to appear in the last 20 years. In A Troublesome Inheritance, he repeated three race-science shibboleths: that the notion of “race” corresponds to profound biological differences among groups of humans; that human brains evolved differently from race to race; and that this is supported by different racial averages in IQ scores.

Wade’s book prompted 139 of the world’s leading population geneticists and evolutionary theorists to sign a letter in the New York Times accusing Wade of misappropriating research from their field, and several academics offered more detailed critiques. The University of Chicago geneticist Jerry Coyne described it as “simply bad science”. Yet some on the right have, perhaps unsurprisingly, latched on to Wade’s ideas, rebranding him as a paragon of intellectual honesty who had been silenced not by experts, but by political correctness.

“That attack on my book was purely political,” Wade told Stefan Molyneux, one of the most popular promoters of the alt-right’s new scientific racism. They were speaking a month after Trump’s election on Molyneux’s YouTube show, whose episodes have been viewed tens of millions of times. Wade continued: “It had no scientific basis whatever and it showed the more ridiculous side of this herd belief.”

Another of Molyneux’s recent guests was the political scientist Charles Murray, who co-authored The Bell Curve. The book argued that poor people, and particularly poor black people, were inherently less intelligent than white or Asian people. When it was first published in 1994, it became a New York Times bestseller, but over the next few years it was picked to pieces by academic critics.

As a frequent target for protest on college campuses, Murray has become a figurehead for conservatives who want to portray progressives as unthinking hypocrites who have abandoned the principles of open discourse that underwrite a liberal society. And this logic has prompted some mainstream cultural figures to embrace Murray as an icon of scientific debate, or at least as an emblem of their own openness to the possibility that the truth can, at times, be uncomfortable. Last April, Murray appeared on the podcast of the popular nonfiction author Sam Harris. Murray used the platform to claim his liberal academic critics “lied without any apparent shadow of guilt because, I guess, in their own minds, they thought they were doing the Lord’s work.” (The podcast episode was entitled “Forbidden knowledge”.)

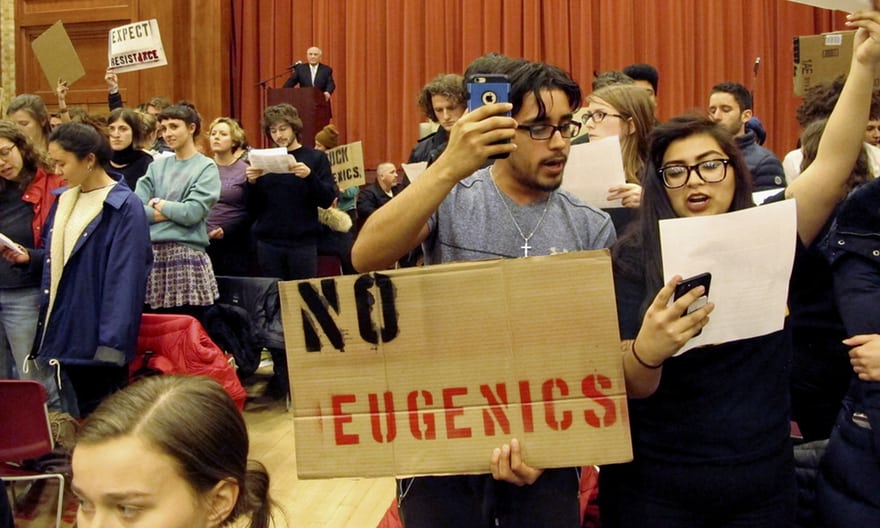

Students in Vermont turn their backs to Charles Murray during a lecture in March last year. Photograph: Lisa Rathke/AP

In the past, race science has shaped not only political discourse, but also public policy. The year after The Bell Curve was published, in the lead-up to a Republican congress slashing benefits for poorer Americans, Murray gave expert testimony before a Senate committee on welfare reform; more recently, congressman Paul Ryan, who helped push the Republicans’ latest tax cuts for the wealthy, has claimed Murray as an expert on poverty.

Now, as race science leaches back into mainstream discourse, it has also been mainlined into the upper echelons of the US government through figures such as Bannon. The UK has not been spared this revival: the London Student newspaper recently exposed a semi-clandestine conference on intelligence and genetics held for the last three years at UCL without the university’s knowledge. One of the participants was the 88-year-old Ulster-based evolutionary psychologist Richard Lynn, who has described himself as a “scientific racist”.

One of the reasons scientific racism hasn’t gone away is that the public hears more about the racism than it does about the science. This has left an opening for people such as Murray and Wade, in conjunction with their media boosters, to hold themselves up as humble defenders of rational enquiry. With so much focus on their apparent bias, we’ve done too little to discuss the science. Which raises the question: why, exactly, are the race scientists wrong?

Race, like intelligence, is a notoriously slippery concept. Individuals often share more genes with members of other races than with members of their own race. Indeed, many academics have argued that race is a social construct – which is not to deny that there are groups of people (“population groups”, in the scientific nomenclature) that share a large amount of genetic inheritance. Race science therefore starts out on treacherous scientific footing.

The supposed science of race is at least as old as slavery and colonialism, and it was considered conventional wisdom in many western countries until 1945. Though it was rejected by a new generation of scholars and humanists after the Holocaust, it began to bubble up again in the 1970s, and has returned to mainstream discourse every so often since then.

In 1977, during my final year in state high school in apartheid South Africa, a sociology lecturer from the local university addressed us and then took questions. He was asked whether black people were as intelligent as white people. No, he said: IQ tests show that white people are more intelligent. He was referring to a paper published in 1969 by Arthur Jensen, an American psychologist who claimed that IQ was 80% a product of our genes rather than our environments, and that the differences between black and white IQs were largely rooted in genetics.

In apartheid South Africa, the idea that each race had its own character, personality traits and intellectual potential was part of the justification for the system of white rule. The subject of race and IQ was similarly politicised in the US, where Jensen’s paper was used to oppose welfare schemes, such as the Head Start programme, which were designed to lift children out of poverty. But the paper met with an immediate and overwhelmingly negative reaction – “an international firestorm,” the New York Times called it 43 years later, in Jensen’s obituary – especially on American university campuses, where academics issued dozens of rebuttals, and students burned him in effigy.

The recent revival of ideas about race and IQ began with a seemingly benign scientific observation. In 2005, Steven Pinker, one of the world’s most prominent evolutionary psychologists, began promoting the view that Ashkenazi Jews are innately particularly intelligent – first in a lecture to a Jewish studies institute, then in a lengthy article in the liberal American magazine The New Republic the following year. This claim has long been the smiling face of race science; if it is true that Jews are naturally more intelligent, then it’s only logical to say that others are naturally less so.

The background to Pinker’s essay was a 2005 paper entitled “Natural history of Ashkenazi intelligence”, written by a trio of anthropologists at the University of Utah. In it, the anthropologists argued that high IQ scores among Ashkenazi Jews indicated that they evolved to be smarter than anyone else (including other groups of Jews).

This evolutionary development supposedly took root between 800 and 1650 AD, when Ashkenazis, who primarily lived in Europe, were pushed by antisemitism into money-lending, which was stigmatised among Christians. This rapid evolution was possible, the paper argued, in part because the practice of not marrying outside the Jewish community meant a “very low inward gene flow”. This was also a factor behind the disproportionate prevalence in Ashkenazi Jews of genetic diseases such as Tay-Sachs and Gaucher’s, which the researchers claimed were a byproduct of natural selection for higher intelligence; those carrying the gene variants, or alleles, for these diseases were said to be smarter than the rest.

Pinker followed this logic in his New Republic article, and elsewhere described the Ashkenazi paper as “thorough and well-argued”. He went on to castigate those who doubted the scientific value of talking about genetic differences between races, and claimed that “personality traits are measurable, heritable within a group and slightly different, on average, between groups”.

In subsequent years, Nicholas Wade, Charles Murray, Richard Lynn, the increasingly popular Canadian psychologist Jordan Peterson and others have all piled in on the Jewish intelligence thesis, using it as ballast for their views that different population groups inherit different mental capacities. Another member of this chorus is the journalist Andrew Sullivan, who was one of the loudest cheerleaders for The Bell Curve in 1994, featuring it prominently in The New Republic, which he edited at the time. He returned to the fray in 2011, using his popular blog, The Dish, to promote the view that population groups had different innate potentials when it came to intelligence.

Sullivan noted that the differences between Ashkenazi and Sephardic Jews were “striking in the data”. It was a prime example of the rhetoric of race science, whose proponents love to claim that they are honouring the data, not political commitments. The far right has even rebranded race science with an alternative name that sounds like it was taken straight from the pages of a university course catalogue: “human biodiversity”.

A common theme in the rhetoric of race science is that its opponents are guilty of wishful thinking about the nature of human equality. “The IQ literature reveals that which no one would want to be the case,” Peterson told Molyneux on his YouTube show recently. Even the prominent social scientist Jonathan Haidt has criticised liberals as “IQ deniers”, who reject the truth of inherited IQ difference between groups because of a misguided commitment to the idea that social outcomes depend entirely on nurture, and are therefore mutable.

Defenders of race science claim they are simply describing the facts as they are – and the truth isn’t always comfortable. “We remain the same species, just as a poodle and a beagle are of the same species,” Sullivan wrote in 2013. “But poodles, in general, are smarter than beagles, and beagles have a much better sense of smell.”

The race “science” that has re-emerged into public discourse today – whether in the form of outright racism against black people, or supposedly friendlier claims of Ashkenazis’ superior intelligence – usually involves at least one of three claims, each of which has no grounding in scientific fact.

The first claim is that when white Europeans’ Cro-Magnon ancestors arrived on the continent 45,000 years ago, they faced more trying conditions than in Africa. Greater environmental challenges led to the evolution of higher intelligence. Faced with the icy climate of the north, Richard Lynn wrote in 2006, “less intelligent individuals and tribes would have died out, leaving as survivors the more intelligent”.

Set aside for a moment the fact that agriculture, towns and alphabets first emerged in Mesopotamia, a region not known for its cold spells. There is ample scientific evidence of modern intelligence in prehistoric sub-Saharan Africa. In the past 15 years, cave finds along the South African Indian Ocean coastline have shown that, between 70,000 and 100,000 years ago, biologically modern humans were carefully blending paint by mixing ochre with bone-marrow fat and charcoal, fashioning beads for self-adornment, and making fish hooks, arrows and other sophisticated tools, sometimes by heating them to 315C (600F). Those studying the evidence, such as the South African archaeologist Christopher Henshilwood, argue that these were intelligent, creative people – just like us. As he put it: “We’re pushing back the date of symbolic thinking in modern humans – far, far back.”

A 77,000-year-old piece of red ochre with a deliberately engraved design discovered at Blombos Cave, South Africa. Photograph: Anna Zieminski/AFP/Getty Images

A second plank of the race science case goes like this: human bodies continued to evolve, at least until recently – with different groups developing different skin colours, predispositions to certain diseases, and things such as lactose tolerance. So why wouldn’t human brains continue evolving, too?

The problem here is that race scientists are not comparing like with like. Most of these physical changes involve single gene mutations, which can spread throughout a population in a relatively short span of evolutionary time. By contrast, intelligence – even the rather specific version measured by IQ – involves a network of potentially thousands of genes, which probably takes at least 100 millennia to evolve appreciably.

Given that so many genes, operating in different parts of the brain, contribute in some way to intelligence, it is hardly surprising that there is scant evidence of cognitive advance, at least over the last 100,000 years. The American palaeoanthropologist Ian Tattersall, widely acknowledged as one of the world’s leading experts on Cro-Magnons, has said that long before humans left Africa for Asia and Europe, they had already reached the end of the evolutionary line in terms of brain power. “We don’t have the right conditions for any meaningful biological evolution of the species,” he told an interviewer in 2000.

In fact, when it comes to potential differences in intelligence between groups, one of the remarkable dimensions of the human genome is how little genetic variation there is. DNA research conducted in 1987 suggested a common, African ancestor for all humans alive today: “mitochondrial Eve”, who lived around 200,000 years ago. Because of this relatively recent (in evolutionary terms) common ancestry, human beings share a remarkably high proportion of their genes compared to other mammals. The single subspecies of chimpanzee that lives in central Africa, for example, has significantly more genetic variation than does the entire human race.

No one has successfully isolated any genes “for” intelligence at all, and claims in this direction have turned to dust when subjected to peer review. As the Edinburgh University cognitive ageing specialist Prof Ian Deary put it, “It is difficult to name even one gene that is reliably associated with normal intelligence in young, healthy adults.” Intelligence doesn’t come neatly packaged and labelled on any single strand of DNA.

Ultimately, race science depends on a third claim: that different IQ averages between population groups have a genetic basis. If this case falls, the whole edifice – from Ashkenazi exceptionalism to the supposed inevitability of black poverty – collapses with it.

Before we can properly assess these claims, it is worth looking at the history of IQ testing. The public perception of IQ tests is that they provide a measure of unchanging intelligence, but when we look deeper, a very different picture emerges. Alfred Binet, the modest Frenchman who invented IQ testing in 1904, knew that intelligence was too complex to be expressed in a single number. “Intellectual qualities … cannot be measured as linear surfaces are measured,” he insisted, adding that giving IQ too much significance “may give place to illusions.”

But Binet’s tests were embraced by Americans who assumed IQ was innate, and used it to inform immigration, segregationist and eugenic policies. Early IQ tests were packed with culturally loaded questions. (“The number of a Kaffir’s legs is: 2, 4, 6, 8?” was one of the questions in IQ tests given to US soldiers during the first world war.) Over time, the tests became less skewed and began to prove useful in measuring some forms of mental aptitude. But this tells us nothing about whether scores are mainly the product of genes or of environment. Further information is needed.

One way to test whether IQ scores are shaped by environment would be to see if you can increase IQ by learning. If so, this would show that education levels, which are purely environmental, affect the scores. It is now well-known that if you practise IQ tests your score will rise, but other forms of study can also help. In 2008, Swiss researchers recruited 70 students and had half of them practise a memory-based computer game. All 35 of these students saw their IQs increase, and those who practised daily, for the full 19 weeks of the trial, showed the most improvement.

Another way to establish the extent to which IQ is determined by nature rather than nurture would be to find identical twins separated at birth and subsequently raised in very different circumstances. But such cases are unusual, and some of the most influential research – such as the work of the 20th-century English psychologist Cyril Burt, who claimed to have shown that IQ was innate – has been dubious. (After Burt’s death, it was revealed that he had falsified much of his data.)

A genuine twin study was launched by the Minneapolis-based psychologist Thomas Bouchard in 1979, and although he was generously backed by the overtly racist Pioneer Fund, his results make interesting reading. He studied identical twins, who have the same genes, but who were separated close to birth. This allowed him to consider the different contributions that environment and biology played in their development. His idea was that if the twins emerged with the same traits despite being raised in different environments, the main explanation would be genetic.

The problem was that most of his identical twins were adopted into the same kinds of middle-class families. So it was hardly surprising that they ended up with similar IQs. In the relatively few cases where twins were adopted into families of different social classes and education levels, there ended up being huge disparities in IQ – in one case a 20-point gap; in another, 29 points, or the difference between “dullness” and “superior intelligence” in the parlance of some IQ classifications. In other words, where the environments differed substantially, nurture seems to have been a far more powerful influence than nature on IQ.

But what happens when you move from individuals to whole populations? Could nature still have a role in influencing IQ averages? Perhaps the most significant IQ researcher of the last half century is the New Zealander Jim Flynn. IQ tests are calibrated so that the average IQ of all test subjects at any particular time is 100. In the 1990s, Flynn discovered that each generation of IQ tests had to be more challenging if this average was to be maintained. Projecting back 100 years, he found that average IQ scores measured by current standards would be about 70.

Yet people have not changed genetically since then. Instead, Flynn noted, they have become more exposed to abstract logic, which is the sliver of intelligence that IQ tests measure. Some populations are more exposed to abstraction than others, which is why their average IQ scores differ. Flynn found that the different averages between populations were therefore entirely environmental.

This finding has been reinforced by the changes in average IQ scores observed in some populations. The most rapid has been among Kenyan children – a rise of 26.3 points in the 14 years between 1984 and 1998, according to one study. The reason has nothing to do with genes. Instead, researchers found that, in the course of half a generation, nutrition, health and parental literacy had improved.

So, what about the Ashkenazis? Since the 2005 University of Utah paper was published, DNA research by other scientists has shown that Ashkenazi Jews are far less genetically isolated than the paper argued. On the claims that Ashkenazi diseases were caused by rapid natural selection, further research has shown that they were caused by a random mutation. And there is no evidence that those carrying the gene variants for these diseases are any more or less intelligent than the rest of the community.

But it was on IQ that the paper’s case really foundered. Tests conducted in the first two decades of the 20th century routinely showed Ashkenazi Jewish Americans scoring below average. For example, the IQ tests conducted on American soldiers during the first world war found Nordics scoring well above Jews. Carl Brigham, the Princeton professor who analysed the exam data, wrote: “Our figures … would rather tend to disprove the popular belief that the Jew is highly intelligent”. And yet, by the second world war, Jewish IQ scores were above average.

A similar pattern could be seen from studies of two generations of Mizrahi Jewish children in Israel: the older generation had a mean IQ of 92.8, the younger of 101.3. And it wasn’t just a Jewish thing. Chinese Americans recorded average IQ scores of 97 in 1948, and 108.6 in 1990. And the gap between African Americans and white Americans narrowed by 5.5 points between 1972 and 2002.

No one could reasonably claim that there had been genetic changes in the Jewish, Chinese American or African American populations in a generation or two. After reading the University of Utah paper, Harry Ostrer, who headed New York University’s human genetics programme, took the opposite view to Steven Pinker: “It’s bad science – not because it’s provocative, but because it’s bad genetics and bad epidemiology.”

Ten years ago, our grasp of the actual science was firm enough for Craig Venter, the American biologist who led the private effort to decode the human genome, to respond to claims of a link between race and intelligence by declaring: “There is no basis in scientific fact or in the human genetic code for the notion that skin colour will be predictive of intelligence.”

Yet race science maintains its hold on the imagination of the right, and today’s rightwing activists have learned some important lessons from past controversies. Using YouTube in particular, they attack the left-liberal media and academic establishment for its unwillingness to engage with the “facts”, and then employ race science as a political battering ram to push forward their small-state, anti-welfare, anti-foreign-aid agenda.

These political goals have become ever more explicit. When interviewing Nicholas Wade, Stefan Molyneux argued that different social outcomes were the result of different innate IQs among the races – as he put it, high-IQ Ashkenazi Jews and low-IQ black people. Wade agreed, saying that the “role played by prejudice” in shaping black people’s social outcomes “is small and diminishing”, before condemning “wasted foreign aid” for African countries.

Similarly, when Sam Harris, in his podcast interview with Charles Murray, pointed out the troubling fact that The Bell Curve was beloved by white supremacists and asked what the purpose of exploring race-based differences in intelligence was, Murray didn’t miss a beat. Its use, Murray said, came in countering policies, such as affirmative action in education and employment, based on the premise that “everybody is equal above the neck … whether it’s men or women or whether it’s ethnicities”.

Race science isn’t going away any time soon. Its claims can only be countered by the slow, deliberate work of science and education. And they need to be – not only because of their potentially horrible human consequences, but because they are factually wrong. The problem is not, as the right would have it, that these ideas are under threat of censorship or stigmatisation because they are politically inconvenient. Race science is bad science. Or rather, it is not science at all.

Gavin Evans lectures at Birkbeck College and is the author of several books, including the memoir, Dancing Shoes is Dead. He has written widely on issues of race, IQ and genetics.
