Quantum Entanglement Wins 2022’s Nobel Prize in Physics

- KEY TAKEAWAYS

- For generations, scientists argued over whether there was truly an objective, predictable reality for even quantum particles, or whether quantum "weirdness" was inherent to physical systems.

- In the 1960s, John Stewart Bell developed an inequality describing the maximum possible statistical correlation between two entangled particles: Bell's inequality.

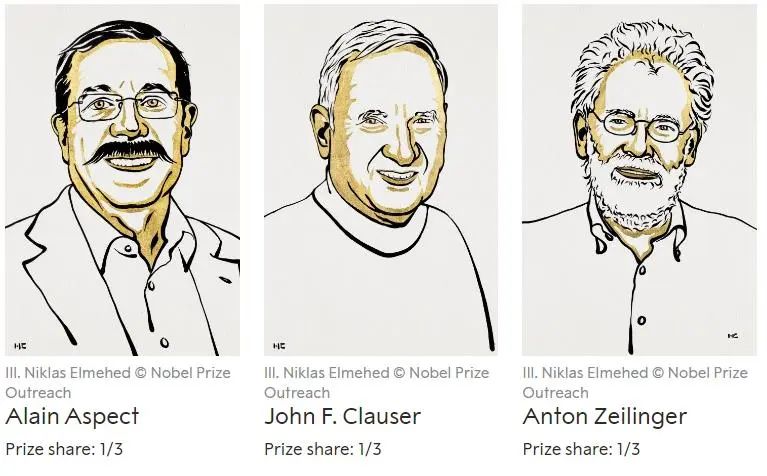

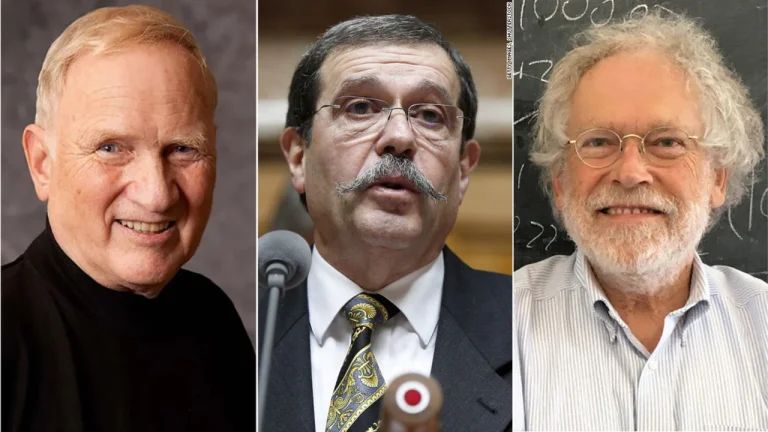

- But certain experiments could violate Bell's inequality, and these three pioneers — John Clauser, Alain Aspect, and Anton Zeilinger — helped make quantum information systems a bona fide science.

There’s a simple but profound question that physicists, despite all we’ve learned about the Universe, cannot fundamentally answer: “What is real?” We know that particles exist, and we know that particles have certain properties when you measure them. But we also know that the very act of measuring a quantum state — or even allowing two quanta to interact with one another — can fundamentally alter or determine what you measure. An objective reality, devoid of the actions of an observer, does not appear to exist in any sort of fundamental way.

But that doesn’t mean there aren’t rules that nature must obey. Those rules exist, even if they’re difficult and counterintuitive to understand. Instead of arguing over one philosophical approach versus another to uncover the true quantum nature of reality, we can turn to properly designed experiments. Even two entangled quantum states must obey certain rules, and that’s leading to the development of quantum information sciences: an emerging field with potentially revolutionary applications. 2022’s Nobel Prize in Physics was just announced, and it’s awarded to John Clauser, Alain Aspect, and Anton Zeilinger for experiments with entangled photons, establishing the violation of Bell inequalities and pioneering quantum information science. It’s a Nobel Prize that’s long overdue, and the science behind it is particularly mind-bending.

Artwork illustrating the three winners of the 2022 Nobel Prize in Physics, for experiments with entangled particles that established Bell’s inequality violations and pioneered quantum information science. From left to right, the three Nobel Laureates are Alain Aspect, John Clauser, and Anton Zeilinger.

There are all sorts of experiments we can perform that illustrate the indeterminate nature of our quantum reality.

- Place a number of radioactive atoms in a container and wait a specific amount of time. You can predict, on average, how many atoms will remain versus how many will have decayed, but you have no way of predicting which atoms will and won’t survive. We can only derive statistical probabilities.

- Fire a series of particles through a narrowly spaced double slit and you’ll be able to predict what sort of interference pattern will arise on the screen behind it. However, for each individual particle, even when sent through the slits one at a time, you cannot predict where it will land.

- Pass a series of particles that possess quantum spin through a non-uniform magnetic field (a Stern-Gerlach apparatus) and half will deflect “up” while half deflect “down” along the direction of the field. Send the “up” beam through a second magnet oriented the same way and every particle deflects “up” again: the spin orientation along that direction is maintained. Insert a perpendicular magnet in between, however, and the spin orientation along the original direction becomes randomized once again.
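To make that third example concrete, here is a minimal Python sketch of sequential spin measurements using the Born rule. It assumes idealized spin-1/2 particles that a first magnet has already sorted into the “up” beam along z, blocks the “down” beam after every later magnet, and restricts magnet orientations to a single plane; the function names are purely illustrative.

```python
import math
import random

# Hypothetical helpers for an idealized spin-1/2 particle. States are real 2-vectors
# (amplitude for "up along z", amplitude for "down along z"); real amplitudes suffice
# because every magnet axis here lies in the x-z plane, at angle theta from z.

def eigenstates(theta):
    """Return the 'up' and 'down' states along an axis tilted by theta from z toward x."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return (c, s), (-s, c)

def measure(state, theta, rng):
    """Born-rule measurement along axis theta: returns (+1 or -1, collapsed state)."""
    up, down = eigenstates(theta)
    amp_up = up[0] * state[0] + up[1] * state[1]
    if rng.random() < amp_up ** 2:
        return +1, up
    return -1, down

def up_fractions(angles, n=100_000, seed=0):
    """Send n particles, pre-selected as 'up' along z, through magnets at the given
    angles. After each magnet only the 'up' beam continues; returns the fraction of
    arriving particles that deflect 'up' at each magnet."""
    rng = random.Random(seed)
    arrived = [0] * len(angles)
    went_up = [0] * len(angles)
    for _ in range(n):
        state = (1.0, 0.0)
        for i, theta in enumerate(angles):
            arrived[i] += 1
            outcome, state = measure(state, theta, rng)
            if outcome == -1:
                break  # the 'down' beam is blocked
            went_up[i] += 1
    return [u / a if a else 0.0 for u, a in zip(went_up, arrived)]

print(up_fractions([0.0]))               # second magnet along z again
print(up_fractions([math.pi / 2, 0.0]))  # perpendicular magnet, then z again
```

Under these assumptions the first call returns `[1.0]`, since a magnet aligned with the first always reproduces “up,” while the second returns values near 0.5 at both stages: the perpendicular magnet erases the earlier z information.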

Certain aspects of quantum physics appear to be totally random. But are they really random, or do they only appear random because our information about these systems is limited, insufficient to reveal an underlying, deterministic reality? Ever since the dawn of quantum mechanics, physicists have argued over this, from Einstein to Bohr and beyond.

When a particle with quantum spin is passed through a directional magnet, it will split in at least 2 directions, dependent on spin orientation. If another magnet is set up in the same direction, no further split will ensue. However, if a third magnet is inserted between the two in a perpendicular direction, not only will the particles split in the new direction, but the information you had obtained about the original direction gets destroyed, leaving the particles to split again when they pass through the final magnet. (Credit: MJasK/Wikimedia Commons)

But in physics, we don’t decide matters based on arguments, but rather on experiments. If we can write down the laws that govern reality — and we have a pretty good idea of how to do that for quantum systems — then we can derive the expected, probabilistic behavior of the system. Given a good enough measurement setup and apparatus, we can then test our predictions experimentally, and draw conclusions based on what we observe.

And if we’re clever, we could even potentially design an experiment that could test some extremely deep ideas about reality, such as whether there’s a fundamental indeterminism to the nature of quantum systems until the moment they are measured, or whether there’s some type of “hidden variable” underlying our reality that pre-determines what the outcome is going to be, even before we measure it.

One special type of quantum system that’s led to a great many key insights concerning this question is relatively simple: an entangled quantum system. All you need to do is create an entangled pair of particles, where the quantum state of one particle is correlated with the quantum state of another. Although, individually, both have completely random, indeterminate quantum states, there should be correlations between the properties of both quanta when taken together.

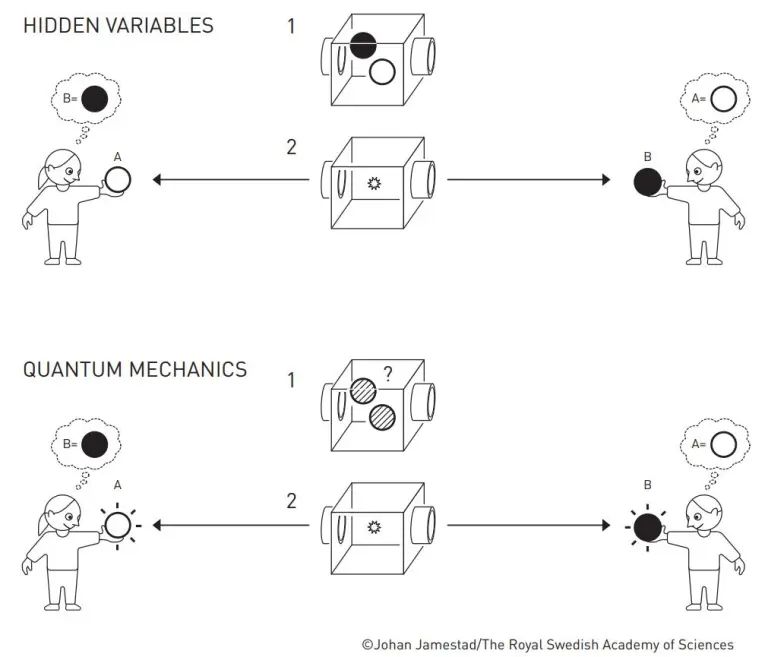

Quantum mechanics’ entangled pairs can be compared to a machine that throws out balls of opposite colours in opposite directions. When Bob catches a ball and sees that it is black, he immediately knows that Alice has caught a white one. In a theory that uses hidden variables, the balls had always contained hidden information about what colour to show. However, quantum mechanics says that the balls were grey until someone looked at them, when one randomly turned white and the other black. Bell inequalities show that there are experiments that can differentiate between these cases. Such experiments have proven that quantum mechanics’ description is correct. (Credit: Johan Jamestad/The Royal Swedish Academy of Sciences)

Right from the outset, this seems weird, even by the standards of quantum mechanics. It’s generally said that there’s a speed limit to how quickly any signal, including any type of information, can travel: the speed of light. But if you:

- create an entangled pair of particles,

- and then separate them by a very large distance,

- and then measure the quantum state of one of them,

- the quantum state of the other one is all of a sudden determined,

- not at the speed of light, but rather instantaneously.

This has now been demonstrated across distances of hundreds of kilometers (or miles) over time intervals of under 100 nanoseconds. If information is being transmitted between these two entangled particles, it’s being exchanged at speeds at least thousands of times faster than light.
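The “thousands of times faster than light” figure follows from simple arithmetic: divide the separation by the time window, then by the speed of light. This sketch assumes illustrative round numbers, 100 km of separation and measurements within 100 ns of each other, rather than the parameters of any specific experiment.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def min_speed_in_c(distance_m, max_time_s):
    """Lower bound on the speed of any hypothetical signal, in multiples of c,
    if it had to cross distance_m within max_time_s."""
    return distance_m / max_time_s / C

print(min_speed_in_c(100e3, 100e-9))  # about 3,336 times the speed of light
```

Larger separations or tighter timing windows only raise this bound.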

It isn’t as simple, however, as you might think. If one of the particles is measured to be “spin up,” for example, that doesn’t mean the other will be “spin down” 100% of the time; that perfect anticorrelation only appears when both are measured along the same axis. Rather, it means that the likelihood that the other is either “spin up” or “spin down” can be predicted with some statistical degree of accuracy: more than 50%, but less than 100%, depending on the relative orientation of the two measurements. The specifics of this property were worked out in the 1960s by John Stewart Bell, whose eponymous inequality ensures that the correlations between the measured states of two entangled particles could never exceed a certain value.
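For the textbook case of two spin-1/2 particles prepared in the singlet (total spin zero) state, quantum mechanics predicts that the probability of opposite results along two axes separated by an angle θ is cos²(θ/2). The sketch below assumes that particular state; for angles between 0° and 90° it gives values between 100% and 50%.

```python
import math

def p_opposite(angle_deg):
    """Quantum prediction for a spin singlet: probability that the two particles
    give opposite results when measured along axes angle_deg apart."""
    return math.cos(math.radians(angle_deg) / 2) ** 2

print(p_opposite(0))              # 1.0: same axis, always opposite
print(round(p_opposite(45), 3))   # 0.854
print(round(p_opposite(90), 3))   # 0.5: perpendicular axes, no correlation
```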

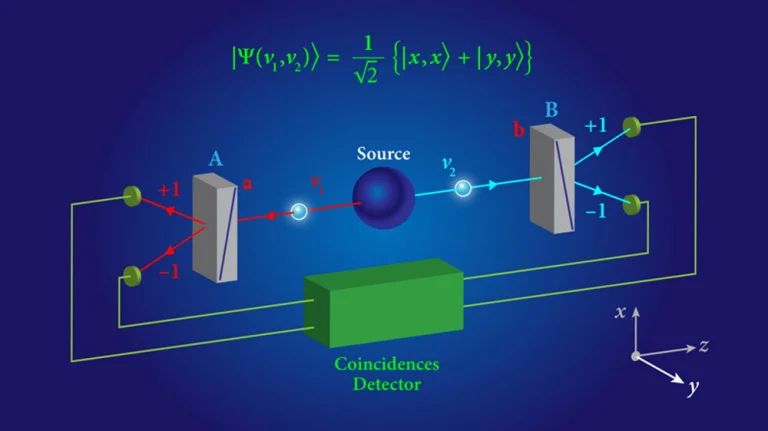

By having a source emit a pair of entangled photons, each of which winds up in the hands of two separate observers, independent measurements of the photons can be performed. The results should be random, but aggregate results should display correlations. Whether those correlations are limited by local realism or not depends on whether they obey or violate Bell’s inequality. (Credit: APS/Alan Stonebreaker)

Or rather, that the measured correlations between these entangled states would never exceed a certain value if local hidden variables are present, but that standard quantum mechanics, without hidden variables, would necessarily violate Bell’s inequality under the right experimental circumstances, resulting in stronger correlations than expected. Bell predicted this, but in the form he derived it, his inequality was, unfortunately, impractical to test.

And that’s where the tremendous advances by this year’s Nobel Laureates in physics come in.

First was the work of John Clauser. The type of work that Clauser did is the kind that theoretical physicists often greatly underappreciate: he took Bell’s profound, technically correct, but impractical work and developed it so that a practical experiment to test it could be constructed. He’s the “C” behind what’s now known as the CHSH inequality, where each member of an entangled pair of particles is in the hands of an observer who can choose to measure the spin of their particle along one of two perpendicular directions. If reality exists independent of the observer and no influence travels faster than light, then the correlations built up over many such measurements must obey the inequality; if reality doesn’t behave that way, à la standard quantum mechanics, the inequality can be violated.
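The gap between the two cases can be checked numerically. This sketch assumes the spin singlet state, for which quantum mechanics predicts a correlation E(a, b) = −cos(a − b) between the ±1 outcomes, and uses the standard CHSH combination S = E(a, b) − E(a, b′) + E(a′, b) + E(a′, b′). Any local hidden-variable model amounts to a mixture of deterministic assignments of ±1 answers to each setting, so checking all 16 such assignments bounds every local model.

```python
import itertools
import math

def e_quantum(a, b):
    """Singlet-state correlation <A*B> for spin measurements along angles a, b (radians)."""
    return -math.cos(a - b)

def chsh(E, a, a2, b, b2):
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)

# Alice's two settings are perpendicular, as are Bob's; Bob's pair is rotated by 45 degrees.
a, a2, b, b2 = 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4
S_qm = chsh(e_quantum, a, a2, b, b2)

# Local deterministic models: each pair carries pre-set answers (+1/-1) for every setting.
S_local = max(
    abs(A0 * B0 - A0 * B1 + A1 * B0 + A1 * B1)
    for A0, A1, B0, B1 in itertools.product((1, -1), repeat=4)
)
print(abs(S_qm), S_local)
```

It prints approximately `2.828 2`: quantum mechanics reaches 2√2, while no local assignment of answers can exceed 2.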

The experimentally measured ratio R(ϕ)/R_0 as a function of the angle ϕ between the axes of the polarizers. The solid line is not a fit to the data points, but rather the polarization correlation predicted by quantum mechanics; it just so happens that the data agrees with theoretical predictions to an alarming precision, and one that cannot be explained by local, real correlations between the two photons. (Credit: S. Freedman, PhD Thesis/LBNL, 1972)

Clauser not only derived the inequality in such a way that it could be tested, but he designed and performed the critical experiment himself, along with then-PhD-student Stuart Freedman, determining that the measured correlations did, in fact, violate Bell’s (and the CHSH) inequality. Local hidden variable theories, all of a sudden, were shown to conflict with the quantum reality of our Universe: a Nobel-worthy achievement indeed!

But, as in all things, the conclusions we can draw from the results of this experiment are only as good as the assumptions that underlie the experiment itself. Was Clauser’s work loophole-free, or could there be some special type of hidden variable that could still be consistent with his measured results?

That’s where the work of Alain Aspect, the second of this year’s Nobel Laureates, comes in. Aspect realized that, if the two observers were in causal contact with one another — that is, if one of them could send a message to the other at the speed of light about their experimental results, and that result could be received before the other observer measured their result — then one observer’s choice of measurement could influence the other’s. This was the loophole that Aspect meant to close.

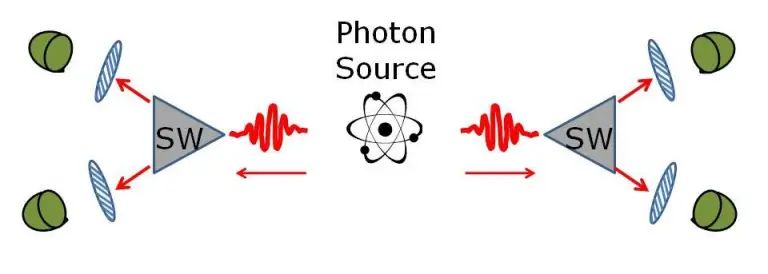

Schematic of the third Aspect experiment testing quantum non-locality. Entangled photons from the source are sent to two fast switches that direct them to polarizing detectors. The switches change settings very rapidly, effectively changing the detector settings for the experiment while the photons are in flight. (Credit: Chad Orzel)

In the early 1980s, along with collaborators Philippe Grangier, Gérard Roger and Jean Dalibard, Aspect performed a series of profound experiments that greatly improved upon Clauser’s work on a number of fronts.

- He established a violation of Bell’s inequality to much greater significance: by 30+ standard deviations, as opposed to Clauser’s ~6.

- He measured a larger violation of Bell’s inequality than ever before: 83% of the theoretical maximum, compared with no more than 55% of the maximum in prior experiments.

- And, by rapidly and continuously switching which polarizer orientation each photon would encounter while it was in flight, he ensured that any “stealth communication” between the two observers would have to occur at speeds significantly in excess of the speed of light, closing the critical loophole.

That last feat was the most significant, with the critical experiment now widely known as the third Aspect experiment. Even if Aspect had done nothing else, demonstrating the inconsistency of quantum mechanics with local, real hidden variables would have been a profound, Nobel-worthy advance all on its own.

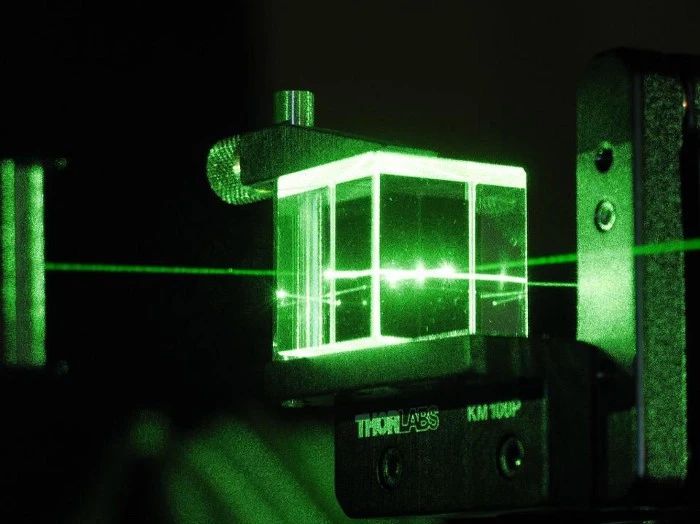

By creating two entangled photons from a pre-existing system and separating them by great distances, we can observe what correlations they display between them, even from extraordinarily different locations. Interpretations of quantum physics that demand both locality and realism cannot account for a myriad of observations, but multiple interpretations consistent with standard quantum mechanics all appear to be equally good. (Credit: Melissa Meister/ThorLabs)

But still, some physicists wanted more. After all, were the polarization settings truly being determined randomly, or could the settings be only pseudorandom, so that some unseen signal, traveling at or below the speed of light, could pass between the two observers and explain the correlations between them?

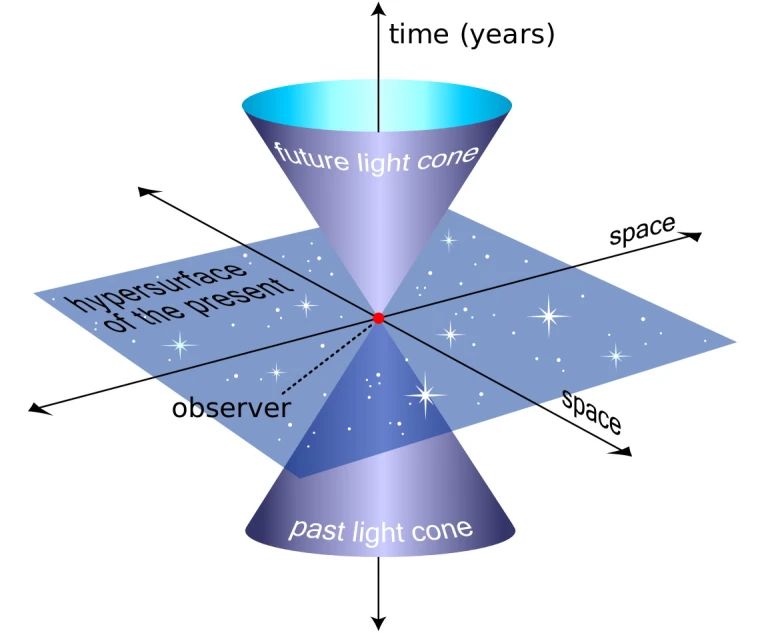

The only way to truly close that latter loophole would be to create two entangled particles, separate them by a very large distance while still maintaining their entanglement, and then perform the critical measurements as close to simultaneously as possible, ensuring that the two measurements were literally outside of each other’s light cones.

Only if each observer’s measurements can be established to be truly independent of one another — with no hope of communication between them, even if you cannot see or measure the hypothetical signal they’d exchange between them — can you truly assert that you’ve closed the final loophole on local, real hidden variables. The very heart of quantum mechanics is at stake, and that’s where the work of the third of this year’s crop of Nobel Laureates, Anton Zeilinger, comes into play.

An example of a light cone, the three-dimensional surface of all possible light rays arriving at and departing from a point in spacetime. The more you move through space, the less you move through time, and vice versa. Only things contained within your past light cone can affect you today; only things contained within your future light cone can be affected by you. Two events outside of one another’s light cones cannot exchange communications under the laws of special relativity. (Credit: MissMJ/Wikimedia Commons)

The way Zeilinger and his team of collaborators accomplished this was nothing short of brilliant, and by brilliant, I mean simultaneously imaginative, clever, careful, and precise.

- First, they created a pair of entangled photons by pumping a down-conversion crystal with laser light.

- Then, they sent each member of the photon pair through a separate optical fiber, preserving the entangled quantum state.

- Next, they separated the two photons by a large distance: initially by about 400 meters, so that the light-travel time between them would be longer than a microsecond.

- And finally, they performed the critical measurement, with the timing difference between each measurement on the order of tens of nanoseconds.
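Whether two measurements are truly outside each other’s light cones comes down to comparing their time difference with the light-travel time between them. In this sketch, the 400 m separation comes from the experiment described above, while the 50 ns timing difference is an assumed stand-in for “tens of nanoseconds.”

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def spacelike(separation_m, time_difference_s):
    """True if two events are outside each other's light cones, i.e. no signal
    travelling at or below light speed could connect them."""
    return abs(time_difference_s) * C < separation_m

print(round(400 / C * 1e9))   # 1334: light needs about 1.33 microseconds to cross 400 m
print(spacelike(400, 50e-9))  # True: 50 ns is far shorter than the light-travel time
```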

They performed this experiment more than 10,000 times, building up statistics so robust they set a new record for significance, while closing the “unseeable signal” loophole. Today, subsequent experiments have extended the distance that entangled photons have been separated by before being measured to hundreds of kilometers, including experiments that sent entangled photons between a satellite in orbit around our planet and ground stations on Earth’s surface.

Many entanglement-based quantum networks across the world, including networks extending into space, are being developed to leverage the spooky phenomena of quantum teleportation, quantum repeaters and networks, and other practical aspects of quantum entanglement. (Credit: S.A. Hamilton et al., 70th International Astronautical Congress, 2019)

Zeilinger also, perhaps even more famously, devised the critical setup that enabled one of the strangest quantum phenomena ever discovered: quantum teleportation. A famous result, the quantum no-cloning theorem, dictates that you cannot produce an identical copy of an arbitrary, unknown quantum state while leaving the original intact. What Zeilinger’s group, along with Francesco De Martini’s independent group, was able to demonstrate experimentally was a scheme in which one particle’s quantum state could be effectively “moved” onto a different particle. Zeilinger’s group also demonstrated entanglement swapping, in which a particle becomes entangled with another particle that it never interacted with directly.

Quantum cloning is still impossible, as the original particle’s quantum properties are not preserved, but a quantum version of “cut and paste” has been definitively demonstrated: a profound and Nobel-worthy advance for certain.
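The logic of this “cut and paste” can be followed on an idealized, noiseless three-qubit state vector. This sketch implements the standard textbook teleportation protocol, not a model of the photonic apparatus Zeilinger’s group actually used: Alice holds the state to be sent (qubit 0) and one half of an entangled pair (qubit 1), Bob holds the other half (qubit 2), and Bob applies a correction selected by Alice’s two measurement results. The helper names are hypothetical.

```python
import math

# Hypothetical helpers for an ideal 3-qubit state vector. Basis index = 4*q0 + 2*q1 + q2.
S2 = 1 / math.sqrt(2)
H = ((S2, S2), (S2, -S2))  # Hadamard gate

def apply_1q(state, gate, q):
    """Apply a single-qubit gate to qubit q of a 3-qubit state vector."""
    mask = 1 << (2 - q)
    new = list(state)
    for i in range(8):
        if not i & mask:
            j = i | mask
            a0, a1 = state[i], state[j]
            new[i] = gate[0][0] * a0 + gate[0][1] * a1
            new[j] = gate[1][0] * a0 + gate[1][1] * a1
    return new

def cnot(state, control, target):
    """Flip the target qubit wherever the control qubit is 1."""
    cm, tm = 1 << (2 - control), 1 << (2 - target)
    return [state[i ^ tm] if i & cm else state[i] for i in range(8)]

def teleport(alpha, beta):
    """Teleport alpha|0> + beta|1> from qubit 0 to qubit 2. Returns, for each of Alice's
    outcomes (m0, m1), its probability and Bob's state after his correction."""
    state = [0j] * 8
    for q0, amp in ((0, alpha), (1, beta)):
        state[4 * q0] += amp * S2      # q1 = 0, q2 = 0
        state[4 * q0 + 3] += amp * S2  # q1 = 1, q2 = 1: Bell pair (|00> + |11>)/sqrt(2)
    state = apply_1q(cnot(state, 0, 1), H, 0)  # Alice's Bell-basis rotation
    results = {}
    for m0 in (0, 1):
        for m1 in (0, 1):
            bob = [state[4 * m0 + 2 * m1], state[4 * m0 + 2 * m1 + 1]]
            p = abs(bob[0]) ** 2 + abs(bob[1]) ** 2  # probability of this outcome
            bob = [x / math.sqrt(p) for x in bob]     # collapse and renormalize
            if m1:
                bob = [bob[1], bob[0]]   # X correction
            if m0:
                bob = [bob[0], -bob[1]]  # Z correction
            results[(m0, m1)] = (p, bob)
    return results

for outcome, (p, bob) in teleport(0.6, 0.8j).items():
    ok = abs(bob[0] - 0.6) < 1e-12 and abs(bob[1] - 0.8j) < 1e-12
    print(outcome, round(p, 3), ok)
```

Each of the four outcomes occurs with probability 0.25 and leaves Bob holding the original amplitudes, while Alice’s qubits end up in definite states fixed by her measurement. The original state no longer exists on her side, which is why teleportation is consistent with the no-cloning theorem, and Bob cannot finish the job until Alice’s two classical bits reach him.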

John Clauser, left, Alain Aspect, center, and Anton Zeilinger, right, are 2022’s Nobel Laureates in physics for advances in the field and practical applications of quantum entanglement. This Nobel Prize has been expected for roughly 20 years, and this year’s selection is very hard to argue against based on the merits of the research. (Credit: Getty Images/Shutterstock, modified by E. Siegel)

The work honored by this year’s Nobel Prize isn’t simply a physical curiosity, one that’s profound for uncovering some deeper truths about the nature of our quantum reality. Yes, it does indeed do that, but there’s a practical side to it as well: one that hews to the spirit of the Nobel Prize’s commitment that it be awarded for research conducted for the betterment of humankind. Owing to the research of Clauser, Aspect, and Zeilinger, among others, we now understand that pairs of entangled particles can be leveraged as a quantum resource, enabling entanglement to be put to practical use at long last.

Quantum entanglement can be established over very large distances, opening up the possibility of communicating quantum information across them. Quantum repeaters and quantum networks are now both capable of performing precisely that task. Additionally, controlled entanglement is now possible between not just two particles, but many, such as in numerous condensed matter and multi-particle systems: again agreeing with quantum mechanics’ predictions and disagreeing with hidden variable theories. And finally, secure quantum cryptography can be built on a Bell-inequality-violating test: again, something demonstrated by Zeilinger himself.
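One well-known way to turn a Bell test into a security check is Ekert’s 1991 (E91) protocol. The Monte Carlo sketch below is idealized (no noise, no eavesdropper) and uses spin-singlet measurement angles: it samples outcomes from the quantum prediction, keeps the perfectly anticorrelated results from matching settings as key bits, and uses four of the other setting combinations to estimate the CHSH value S. Any eavesdropping strategy that fixes the outcomes in advance behaves like a local hidden-variable model and so cannot produce |S| above 2. The function name and parameters are hypothetical.

```python
import math
import random

def e91_sketch(n_pairs=100_000, seed=7):
    """Idealized E91-style run on spin-singlet pairs.
    Returns (number of key bits, number of key mismatches, CHSH value S)."""
    rng = random.Random(seed)
    alice_angles = (0.0, math.pi / 4, math.pi / 2)
    bob_angles = (math.pi / 4, math.pi / 2, 3 * math.pi / 4)
    sums = {}  # (i, j) -> [sum of A*B, count] for non-matching settings
    key_bits = mismatches = 0
    for _ in range(n_pairs):
        i, j = rng.randrange(3), rng.randrange(3)
        a, b = alice_angles[i], bob_angles[j]
        A = rng.choice((1, -1))
        # Quantum prediction for the singlet: P(B == A) = (1 + E) / 2 with E = -cos(a - b)
        same = rng.random() < (1 - math.cos(a - b)) / 2
        B = A if same else -A
        if a == b:
            # Matching settings: outcomes are perfectly anticorrelated, so Bob
            # flips his bit and both share a key bit. Count any failures.
            key_bits += 1
            mismatches += (A == B)
        else:
            s = sums.setdefault((i, j), [0, 0])
            s[0] += A * B
            s[1] += 1
    E = {k: total / count for k, (total, count) in sums.items()}
    # CHSH from Alice's settings 0 and pi/2 and Bob's settings pi/4 and 3pi/4
    S = E[(0, 0)] - E[(0, 2)] + E[(2, 0)] + E[(2, 2)]
    return key_bits, mismatches, S

key_bits, mismatches, S = e91_sketch()
print(key_bits, mismatches, round(S, 2))
```

In this idealized run the key has no mismatches and S lands near −2√2 ≈ −2.83; a real system would also have to contend with noise, loss, and finite statistics.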

Three cheers for 2022’s Nobel Laureates in physics, John Clauser, Alain Aspect, and Anton Zeilinger! Because of them, quantum entanglement is no longer simply a theoretical curiosity, but a powerful tool being put to use on the cutting-edge of today’s technology.

Ethan Siegel is a Ph.D. astrophysicist, author, and science communicator, who professes physics and astronomy at various colleges. He has won numerous awards for science writing since 2008 for his blog, Starts With A Bang, including the award for best science blog by the Institute of Physics. His two books, Treknology: The Science of Star Trek from Tricorders to Warp Drive and Beyond the Galaxy: How humanity looked beyond our Milky Way and discovered the entire Universe, are available for purchase at Amazon. Follow him on Twitter @startswithabang.
