The Science (and Business) Behind COVID-19 Disinformation

Rumors around COVID-19 vaccines and death, like the Died Suddenly video we highlighted earlier this week, aren’t random. Disinformation campaigns are deliberate, often orchestrated, and highly effective in confusing people enough to change behaviors, like not getting the COVID-19 vaccine. And it’s a very lucrative business. Malicious rumors continue to be a massive challenge in public health, but we aren’t hopeless. There are things that can be done.

Note: Disinformation is different from misinformation. Disinformation is false information that is deliberately intended to mislead. Misinformation is misleading information shared without malicious intent. Both are different from healthy scientific debate.

Landscape

Rumors, stigma, and conspiracy theories related to the pandemic are everywhere. One study found that in the infancy of the pandemic (April 2020), they were present in 85 countries and in 25 different languages. The countries with the highest levels? India followed by the U.S. and China.

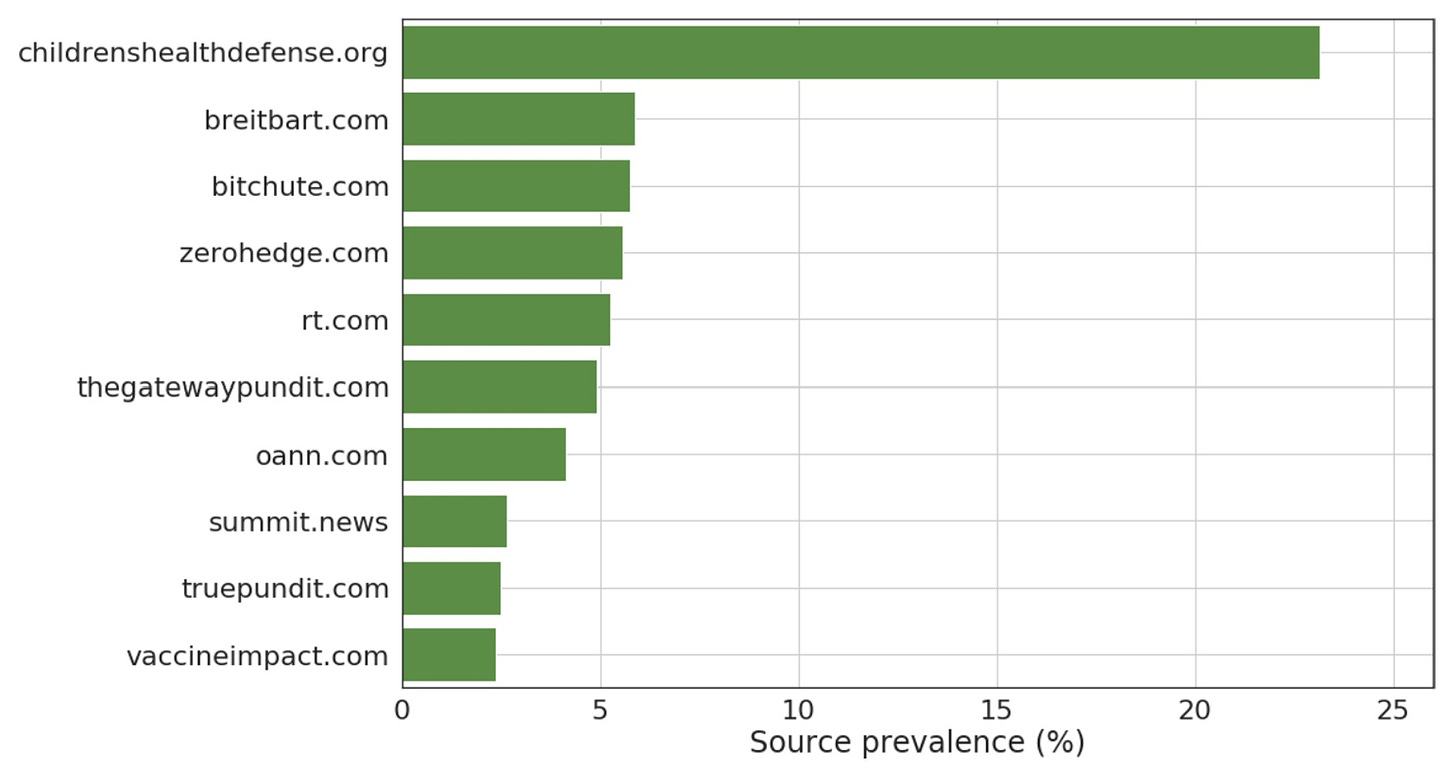

Analyses have found that 12 people, coined the “disinformation dozen,” are responsible for 65% of misleading claims, rumors, and lies about COVID-19 vaccines on social media. Their impact is greatest on Facebook, where they account for up to 73% of rumors, but it also bleeds into Instagram and Twitter. A scientific study published in Nature found that 1 in 4 anti-COVID-19 vaccine tweets originated from the so-called Children’s Health Defense, which is controlled by one man.

Top low-credibility sources. We considered tweets shared by users geolocated in the U.S. that link to a low-credibility source. Sources are ranked by percentage of the tweets considered.

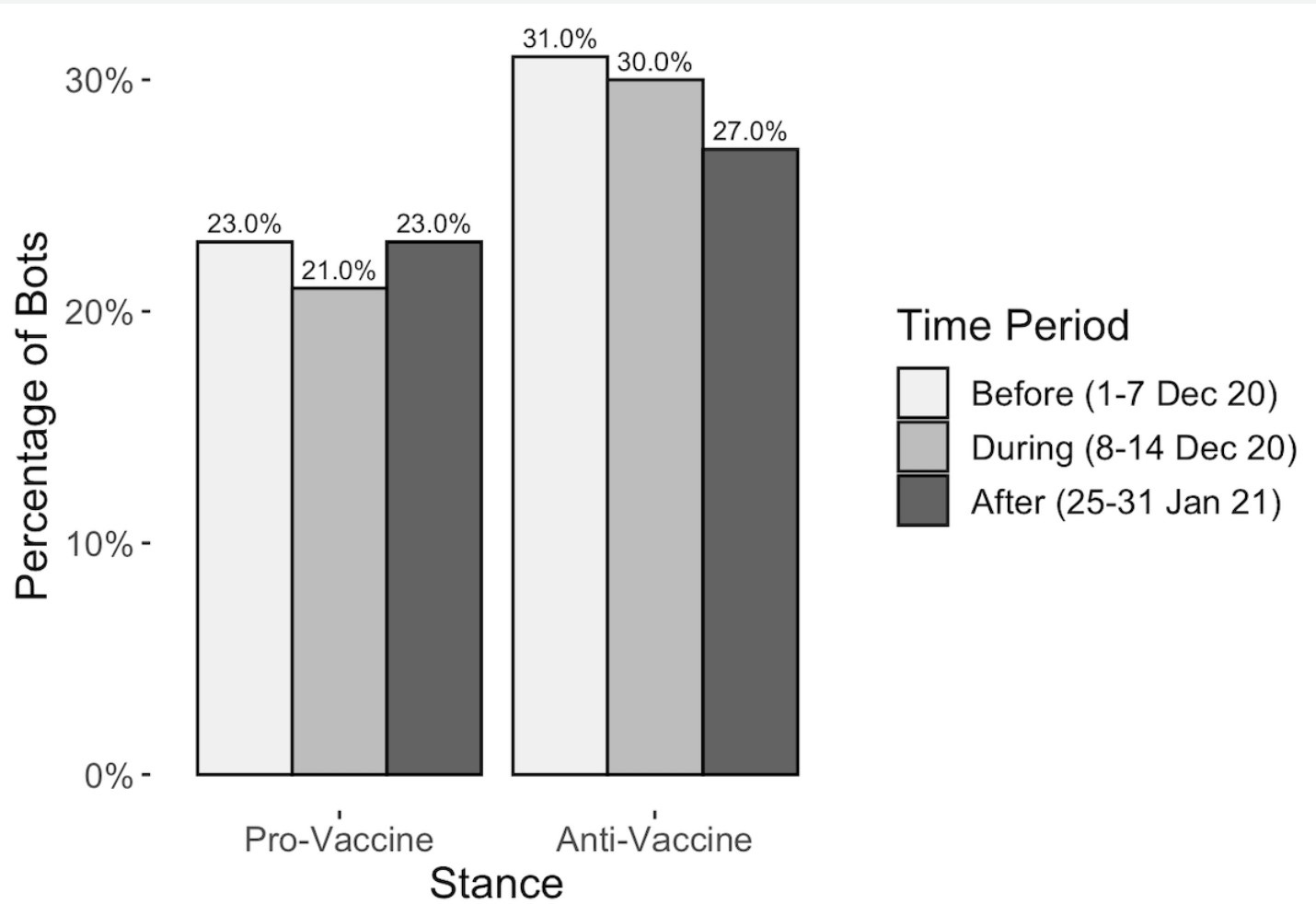

Bots, or automated accounts on social media used to spread online disinformation, have been successfully used to manipulate democracies. They are starting to be used for public health topics, too, like e-cigarettes and medications.

During the pandemic, research found that accounts linking to coronavirus information from less reliable sites were more likely to be bots. Another study found bot activity in the weeks before and after the original COVID-19 vaccine roll-out for both pro- and anti-vaccine content. However, it was particularly high for anti-COVID-19 vaccine content and highest leading up to the roll-out.

Percentage of bots by stance by time period. Source: Blane et al., 2022, J Med Internet Res

As Georgetown’s Center for Security reported, amplification of disinformation campaigns will get worse with the rise of artificial intelligence and machine learning.

Why does it work so well?

False information goes farther, faster, deeper and more broadly than the truth. One study (published in 2018—before the pandemic) found false news was 6 times faster at spreading than the truth, reached far more people, and was more broadly diffused.

Slide made by Katelyn Jetelina; data source: Vosoughi et al., 2018

Why does false news spread so quickly? There are specific tactics used to ensure that information goes viral:

- Leverage social media. Our information ecosystem is very different than it used to be. We have social media. It's a huge part of how people get their news and the greatest source of health information worldwide.

- Exploit information gaps quickly. “A lie can go around the world before the truth gets its pants on.” It takes an order of magnitude more effort to refute rumors than to invent them. (This is called Brandolini’s Law.) Filling the information void quickly is key. We saw this with the NFL injury, for example.

- Fail to provide context. Vaccine rumors are intentionally vague. Specifics are not narrowed down, and conditions that may seem the same to an untrained person, but are entirely distinct to a medical professional, get lumped together. (Myocarditis is not caused by a blood clot.) Different hypotheses are blended together, allowing proponents to shift from one to another when the data don’t turn out like they expected.

- Kernel of truth. Almost all vaccine rumors have a kernel of truth: something that is true but then distorted, taken out of context, or exaggerated. For example, VAERS does say that more than 18,000 people have died after the vaccine. However, this number is taken out of context. VAERS is a passive surveillance system whose reports do not establish cause, and assuming that a death after vaccination was caused by vaccination is a post hoc fallacy.

- Sowing doubts about scientific consensus. This was famously done in the 1960s by Big Tobacco, with companies funding sham studies. Researchers found this tactic was intentionally used with vaccines during the pandemic.

- Exaggerate partisan grievances. Harvard identified the most common narratives of COVID-19 disinformation as “corrupt elites,” “freedom under siege,” and “health freedom.”

- Presenting fringe views as mainstream. This was dangerously on display last Friday when the BBC invited a prominent anti-vaxxer on TV to talk about statins, but he instead hijacked the conversation and pivoted to claims that mRNA vaccines lead to death. This was dangerous because airtime from a “legitimate” mainstream media source gives the rumors credibility. He knew it, too: he continues to tout the fact that he made it onto the BBC, getting tens of thousands of likes.

Effective

Social media is a domain for manipulating beliefs and ideas. The danger is that it ultimately leads to real-world actions, such as not getting vaccinated.

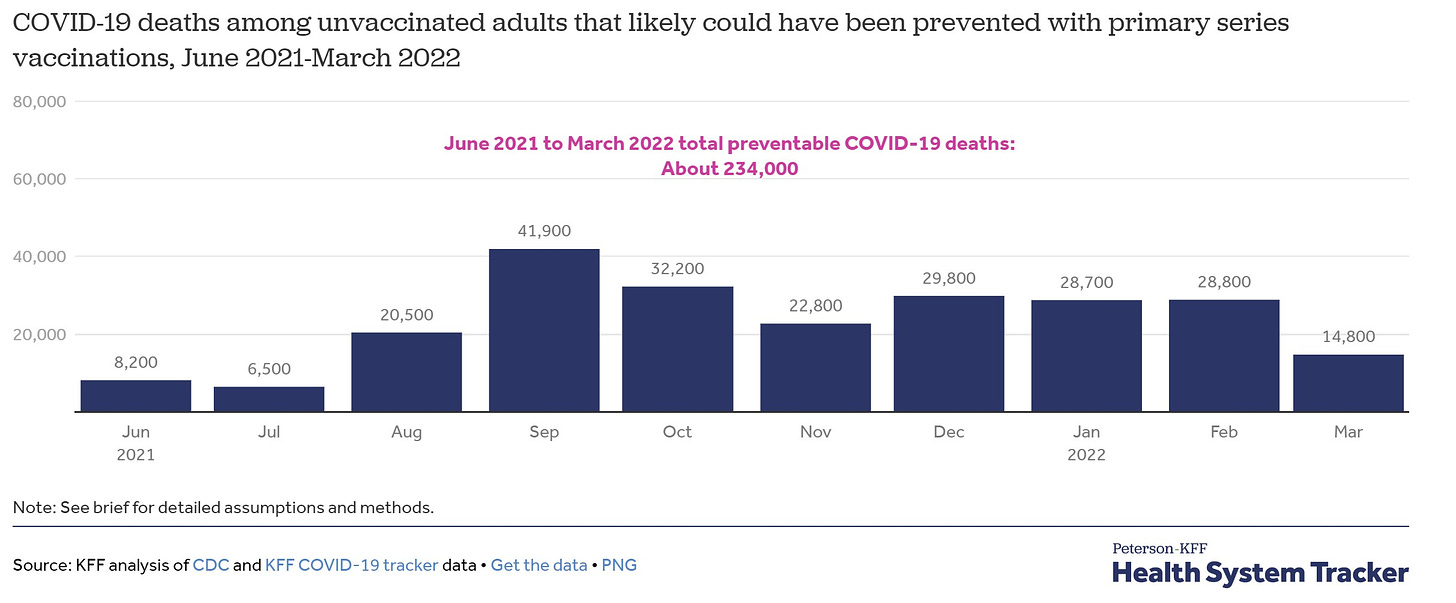

The Kaiser Family Foundation found that between June 2021 and March 2022, 234,000 deaths could have been prevented with the primary series of vaccinations.

The impact of these rumors will bleed into other vaccines. A recent MMWR paper found only 14 states have ≥95% coverage of the MMR vaccine among kindergarteners; 13 states have <90% coverage.

The danger is that infectious diseases violate the assumption of independence. One person’s actions directly impact the person next to them. We are seeing this in the Ohio measles outbreak, where some children hospitalized are only partially vaccinated because they weren’t old enough for the complete series.

Why spread rumors?

Why would people intentionally push a rumor? It’s simple: to turn a profit. Disinformation campaigns, like those targeting COVID-19 vaccines, turn out to be an incredibly lucrative business model. The Center for Countering Digital Hate outlined this clearly. Some examples include:

- Joseph Mercola uses health disinformation to promote the sale of supplements, books, and food. During the height of the pandemic, he promoted a new website offering alternative remedies to prevent or treat COVID-19. His business has a net worth of $100 million.

- Robert F. Kennedy Jr. is the leading anti-vaxxer of the pandemic, as he owns Children’s Health Defense. He gained more than 1 million followers in 2020, and traffic to his website rose sharply in March 2021, with 2.35 million visits.

What to do about disinformation?

Treat it like the public health problem it is: investment, surveillance, prevention, intervention. Establish public-private partnerships. Integrate education into schools, like Finland, which has started educating its youth about disinformation.

On an individual level, combating every rumor that pops up will be a game of whack-a-mole. Researchers have found that education about disinformation tactics makes people more likely to reject disinformation. Some examples include:

- Games, like GoViral, teach people how information is manipulated.

- Creative videos, like those by Truth Labs for Education, teach people about different tactics, like scapegoating.

Bottom line

Twelve people are responsible for 65% of disinformation about COVID-19 vaccines on social media. It’s coordinated, effective, lucrative, and costs lives. This is true during the pandemic and it will be true for other public health problems. It’s a public health and biosecurity threat. And we need to treat it like one.

Love, YLE

“Your Local Epidemiologist (YLE)” is written by Dr. Katelyn Jetelina, MPH PhD—an epidemiologist, data scientist, wife, and mom of two little girls. During the day she works at a nonpartisan health policy think tank and is a senior scientific consultant to a number of organizations, including the CDC. At night she writes this newsletter. Her main goal is to “translate” the ever-evolving public health science so that people will be well equipped to make evidence-based decisions. This newsletter is free thanks to the generous support of fellow YLE community members. To support this effort, subscribe.