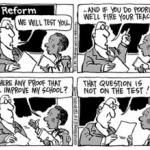

Common Core “Results” Aren’t Actually Test Scores

A few states have now released results from the Common Core standardized tests administered to students last spring. The Associated Press recently published a story about them, and over the next couple of months we can expect a flood of press releases, news articles and opinion columns bragging about the “success” of these tests.

But nearly all the news and opinion pieces will be wildly misleading. That’s because Common Core “results” aren’t actually test scores. In fact, the numbers tell us more about the states’ test scorers than they do about schoolchildren.

Consider the AP story, for example. It says that, across seven states, “overall scores higher than expected, though still below what many parents may be accustomed to seeing.” But the only things that have been released are percentages of students who supposedly meet “proficiency” levels. Those are not test scores—certainly not what parents would understand as scores. They are entirely subjective measurements.

Here’s why. When a child takes a standardized test, his or her results are turned into a “raw score,” that is, the actual number of questions answered correctly or, when an answer is worth more than one point, the actual number of points the child received. That is the only truly objective “score,” and yet Common Core raw scores have not been released.

Raw scores are adjusted (in an ideal world, to account for the difficulty of questions from year to year) and converted to “scale scores.” A good way to understand those is to think of the SAT. When we say a college applicant scored a 600 on the math portion of the SAT, we do not mean he or she got 600 answers right; we mean the raw score was run through a formula that created a scale score, and that formula may change depending on which version of the SAT was taken. Standardized test administrators rarely publicize scale scores, and the Common Core administrators have not.

Then the test administrators decide on “cut scores,” that is, the numerical levels of scale scores where a student is declared to be basic, proficient or advanced. (Here are the cut scores for the “Smarter Balanced” Common Core test. As of August, the PARCC test hadn’t set cut scores.)
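To make the three steps concrete, here is a minimal sketch in Python of how a raw score might become a scale score and then a label. Everything in it is invented for illustration: the 50-point test, the 200-to-800 scale, the linear formula, the cut scores of 450, 550 and 650, and the extra "below basic" label. Real tests use more elaborate formulas that differ from one test form to the next.

```python
from bisect import bisect_right

# Hypothetical conversion: a simple linear formula, not any real test's method.
def to_scale_score(raw_points, max_points, scale_min=200, scale_max=800):
    """Map a raw score (points earned) onto a reporting scale."""
    fraction = raw_points / max_points
    return round(scale_min + fraction * (scale_max - scale_min))

# Hypothetical cut scores: the lowest scale score that earns each label.
CUT_SCORES = [450, 550, 650]
LEVELS = ["below basic", "basic", "proficient", "advanced"]

def to_level(scale_score, cut_scores=CUT_SCORES):
    """Return the label for a scale score under the chosen cut scores."""
    # bisect_right counts how many cut scores the student has reached.
    return LEVELS[bisect_right(cut_scores, scale_score)]

raw = 33                          # points earned on an invented 50-point test
scale = to_scale_score(raw, 50)   # the raw score run through the formula
level = to_level(scale)           # the label assigned by the cut scores
print(raw, scale, level)
```

Only the first number, the raw score, comes directly from the child's answers. The other two depend on choices made by whoever writes the formula and picks the cut scores.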

Now, when a news story says that proficiency percentages were “higher than expected,” you should know what was “expected.” The Common Core consortiums gave the strong impression that they would align their levels of “proficiency” with the National Assessment of Educational Progress (NAEP) nationwide standardized test. (That is, by the way, an absurdly high standard. Diane Ravitch explains that on the NAEP, “Proficient is akin to a solid A.”)

Score setting is a subjective decision, implemented by adjusting the scale and/or cut scores. If proficiency percentages are “higher than expected,” it simply means the consortium deliberately set the scores for proficiency to make results look better than the NAEP’s. And that is all it means.
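A short Python sketch shows how much the reported percentage depends on where the line is drawn. The ten scale scores and both cut scores below are made up for illustration and do not come from any actual test.

```python
def percent_proficient(scale_scores, proficient_cut):
    """Share of students whose scale score is at or above the cut score."""
    meeting = sum(1 for score in scale_scores if score >= proficient_cut)
    return 100 * meeting / len(scale_scores)

# Invented scale scores for ten students; the students' answers never change.
scores = [410, 455, 480, 505, 520, 540, 565, 590, 620, 700]

# Two hypothetical choices of cut score applied to the same students.
strict = percent_proficient(scores, 580)
lenient = percent_proficient(scores, 500)

print(f"cut score 580: {strict:.0f}% proficient")
print(f"cut score 500: {lenient:.0f}% proficient")
```

With the higher cut score, 30 percent of these students are "proficient"; with the lower one, 70 percent are. Nothing about the children changed between the two lines of output.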

It is no different from what many states did to standardized test results in anticipation of the Common Core exams. New York intentionally lowered and subsequently increased statewide results on its standardized tests. Florida lowered passing scores on its assessment so fewer children and schools would be declared failures. The District of Columbia lowered cut scores so more students would appear to have done well. Other states did the same.

The bottom line is this: The 2015 Common Core tests simply did not and cannot measure whether students did better or worse. The “Smarter Balanced” consortium (with its corporate partner McGraw-Hill), the only one to release results so far, decided to make them look better than the NAEP, but worse than prior standardized tests. The PARCC consortium (with corporate partner Pearson) is now likely to do the same. It’s fair to say the results are rigged, or as the Washington Post has more gently put it, “proficiency rates…are as much a product of policymakers’ decisions as they are of student performance.”

So in the coming weeks and months when consortium or state officials announce “proficiency” levels from Common Core tests, understand that these are simply not objective measurements of students’ learning.