In his State of the Union address, President Obama heartened many progressives with a call for raising the minimum wage to $9.00 (from its current $7.25), and then pegging its value to increases in the cost of living. This would be a bold move, and it raises an important question: What should the minimum wage be? What is the appropriate floor for the labor market?

This question was first raised a century ago in a scattering of state-level efforts. At the time, many reformers saw wage regulation as a means of pressing some workers—women, children, blacks, and immigrants—out of the labor market entirely. The goal was not so much a floor to lift wages as a door to shut out low-wage “chiselers.” In an era when most other industrial democracies were forging ahead with broader minimum-wage laws, American efforts were constrained to a few states, aimed at a few workers, and routinely disdained by the courts—which saw them as violations of the freedom to make contracts.

The economic crisis of the 1930s recast this debate. Early on, New Dealers saw the minimum wage as part of a broader push for “fair competition,” sustaining responsible employers by penalizing their low-wage competitors. The idea of calibrating the minimum to the wages of other workers was embedded in “prevailing wage” benchmarks for all government contracts. Some keyed labor standards to living standards, targeting—as FDR put it in his second inaugural address—the “one-third of a nation ill-housed, ill-clad, ill-nourished.” Some saw the minimum wage as an essential complement to the protection of labor’s rights; it was a way to “underpin the whole wage structure…. a point from which collective bargaining could take over.” And, most broadly (in an argument that resonates today), the minimum wage was pursued as a recovery strategy, using increased purchasing power to achieve renewed prosperity.

The end result, the Fair Labor Standards Act (FLSA) of 1938, banned most child labor, established a maximum workweek of 44 hours (rolled back to 40 hours in 1940), and set a minimum hourly wage of 25 cents (about $3.60/hour in inflation-adjusted, 2012 dollars). This was a modest starting point, an unhappy compromise between labor and New Deal interests looking to raise the floor and business and southern interests looking to keep it low (and full of holes). In the decades that followed, the scope and level of the minimum wage remained a political struggle. The federal minimum has been raised twenty-three times since 1938. Most of these amounted to a bump of a dime or a quarter—and never more than 70 cents in one shot.

Every legislative battle over the minimum wage has been marked by grave concerns about interfering with markets or freedom of contract, and by dire predictions (repeatedly debunked) that each increase would drive businesses into bankruptcy and workers into the soup lines. In the absence of a professional wage commission (common in other countries, including the United Kingdom), every increase had to run the gauntlet of Congress. This not only pared any increase back to what was politically feasible in any given session, but also ensured, given the long reign of “Jim Crow” southerners in Congress, that many sectors and occupations (agriculture, domestics) would be exempted from its coverage altogether.

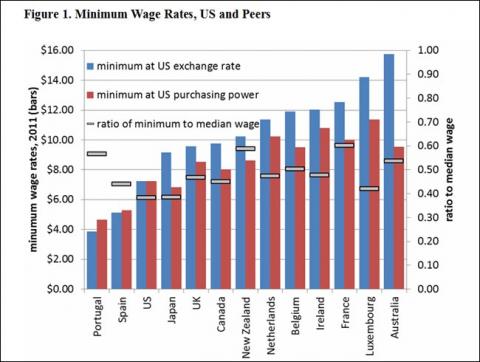

As a result, the American minimum wage hits a lower target, and covers a smaller share of its workforce, than those in most of its peer countries. Of the subset of thirteen rich OECD democracies with comparable data, all but two (Spain and Portugal) have higher minimum-wage rates (calculated at either the exchange rate or the purchasing power parity of the U.S. dollar) than the United States. On the ratio of minimum-wage rates to the median earnings of full-time workers in each country, the United States ranks dead last.

But let’s set the politics aside for the moment and imagine that the minimum wage could be linked, now and across its history, to some clear and objective criteria. We chart these in the graphic below. A first gambit, following the president’s logic, would be to use the poverty level. To keep a family of three above the 2013 federal poverty line (the lowest of the reference lines on the graph below), a full-time worker would need a minimum wage of $9.55.
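The arithmetic linking an hourly wage to annual earnings is simple, and a rough sketch may help readers test any poverty benchmark for themselves. The sketch assumes a full-time, full-year schedule of 2,080 hours (40 hours for 52 weeks). That figure is our assumption for illustration, not necessarily the one behind the $9.55 benchmark, and the function names are hypothetical.

```python
# Assumption (not from the article): full-time, full-year work
# means 40 hours a week for 52 weeks.
HOURS_PER_YEAR = 40 * 52  # 2,080 hours


def annual_earnings(hourly_wage, hours=HOURS_PER_YEAR):
    """Gross annual pay, before any taxes or transfers."""
    return round(hourly_wage * hours, 2)


def wage_needed(annual_income, hours=HOURS_PER_YEAR):
    """Hourly wage a single full-time earner needs to reach an annual income."""
    return round(annual_income / hours, 2)


if __name__ == "__main__":
    # Current federal minimum, the proposed minimum, and the poverty-line wage.
    for wage in (7.25, 9.00, 9.55):
        print(f"${wage:.2f}/hour -> ${annual_earnings(wage):,.2f}/year")
```

Passing any annual income threshold to `wage_needed` gives the matching hourly wage under the same hours assumption. A shorter work year raises the wage required.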

It is widely recognized, however, that the poverty level is an outdated and insufficient measure of family needs and expenses: it is based on spending patterns of the 1960s, it underestimates the costs of transportation and housing and health care, and it omits other expenses—most notably child care—altogether. For these reasons, the Census Bureau has begun to experiment with an alternative supplemental poverty measure. This index, as Shawn Fremstad points out, puts minimum-wage workers and their families even further behind. The supplemental poverty wage for a family of four would be about $12.87/hour. An even better yardstick would be a “living wage” that accounts for both the actual cost of living and any taxes or transfers. Such a threshold varies across and within states, but even where the cost of living is modest, it would suggest a minimum wage two or three times the current level (in Harlingen, Texas, the living wage for a family of three is $20.97; in Des Moines, Iowa, it is $25.38; in New York City, $32.30).

The second option, suggested by the second half of the president’s pitch, is that once the wage is pulled to a more respectable level, its future value be indexed to inflation. In this way the maintenance of its real value would not be dependent on the whims of Congress—which has twice in the last generation allowed a decade to pass between increases (1981-1991 and 1997-2007).

The inflation-adjusted minimum wage is shown in the above graph as the “real minimum wage” (the statutory minimum expressed in 2012 dollars). Adjusting for inflation with the basic consumer price index yields the commonly cited benchmark of about $10.50, the value of the minimum at its peak in 1968. If we use the preferred CPI-U-RS index of inflation (which applies our current method for measuring price increases to earlier periods, yielding a slightly lower rate of inflation), that 1968 peak would be worth $9.25 in 2012 dollars.

The danger here, of course, is that a built-in cost-of-living adjustment would likely displace or at least delay future legislated increases. As a result, the level and impact of a higher minimum would depend greatly on the starting point. Starting north of $9.00/hour would put us about where we were in 1968. Starting any lower might condemn us to a meager minimum wage for the foreseeable future.

A better option would be to calibrate the minimum wage to productivity, or to the growth of the economy. There is a certain logic and justice to the assumption that the rewards of economic growth be shared equally. In fact, the minimum wage did keep pace with productivity from the end of the Second World War into the late 1960s, helping (alongside strong unions and other political commitments) to ensure shared prosperity during that era.

There are a few ways of imagining this link between economic growth and the minimum wage. If the minimum wage had tracked productivity growth (using the output per hour of all persons in the nonfarm business sector) since 1947, it would be at $14.73/hour today. If it had tracked productivity growth just since 1968 (a higher starting point, when the minimum’s real dollar value peaked at $9.25), the minimum wage would be $20.85 today. And even if the minimum reflected only half of the productivity gains since 1968, it would still be $15.05/hour.
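The relationship among the post-1968 figures can be checked with a few lines of arithmetic. The sketch below does not use productivity data. It backs out the growth rate implied by the two figures in the text ($9.25 and $20.85) and then applies half of that growth. The function names are illustrative.

```python
# Both figures come from the text, in 2012 dollars.
PEAK_1968 = 9.25       # real value of the minimum wage at its 1968 peak
FULL_TRACKING = 20.85  # minimum wage had it tracked productivity since 1968


def implied_growth(start, end):
    """Cumulative growth rate implied by a starting and an ending value."""
    return end / start - 1


def indexed_wage(start, growth, share=1.0):
    """Wage after passing through a given share of cumulative growth."""
    return round(start * (1 + share * growth), 2)


if __name__ == "__main__":
    growth = implied_growth(PEAK_1968, FULL_TRACKING)
    print(f"Implied productivity growth since 1968: {growth:.0%}")
    print(f"Full pass-through: ${indexed_wage(PEAK_1968, growth):.2f}")
    print(f"Half pass-through: ${indexed_wage(PEAK_1968, growth, share=0.5):.2f}")
```

Under these inputs the half pass-through case reproduces the $15.05 figure, the midpoint between the 1968 value and the fully indexed wage.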

The growing gap between productivity and the minimum wage (and between productivity and earnings more generally) is pretty stark. This is partly explained by the changing character of that productivity: since the 1970s, a growing share of investment has been siphoned off for depreciation (much of it to pay for the rapid turnover of computer hardware and software). As Dean Baker and Will Kimball have argued, this reduces the “usable productivity,” or the share of economic growth that might reasonably be expected to show up in paychecks. But even if we account for this, the gap still yawns: even if it were pegged to this more conservative measure of growth since 1968, the minimum wage would be $16.54/hour today.

Finally, we could tie the minimum to the wages of other workers. This, in effect, retreats from the assumption that all wages (and the minimum) should rise with productivity, suggesting instead that the minimum wage should be calibrated to the earnings of the typical worker. Unfortunately, the Bureau of Labor Statistics does not have consistent, reliable data on the wages earned by the median worker (the typical worker, who earns more than half of all workers and less than the other half). Instead, we use the average hourly wage of production and nonsupervisory workers, who constitute around the bottom 80 percent of the workforce.

At its inception in 1938, the minimum wage was a little more than a third of the average production wage. The 1950 increase (to $.75/hour) pulled the minimum wage to over half the average production wage—a ratio it maintained into the late 1960s. But, as noted, the value of the minimum has dropped since then. Even in an era of general wage stagnation, the minimum wage has dropped back to barely a third of the average production wage. If it had stayed at half the average production wage, it would be $9.54 today, more than $2.25/hour higher than the actual federal minimum wage.
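The wage-ratio benchmark can be worked backward from the figures in this paragraph. In the sketch below, the average production wage is not an independent data point: it is simply twice the $9.54 half-wage benchmark, and the variable names are illustrative.

```python
# Figures from the text.
HALF_AVERAGE_BENCHMARK = 9.54  # minimum wage if held at half the average production wage
FEDERAL_MINIMUM = 7.25         # current federal minimum wage

# Derived, not reported: the average production wage implied by the benchmark.
implied_average_wage = round(2 * HALF_AVERAGE_BENCHMARK, 2)

# Share of the implied average wage that the current minimum represents.
current_ratio = round(FEDERAL_MINIMUM / implied_average_wage, 2)

# Hourly shortfall relative to the half-wage benchmark.
shortfall = round(HALF_AVERAGE_BENCHMARK - FEDERAL_MINIMUM, 2)

if __name__ == "__main__":
    print(f"Implied average production wage: ${implied_average_wage:.2f}/hour")
    print(f"Current minimum as a share of that wage: {current_ratio:.0%}")
    print(f"Shortfall from the half-wage benchmark: ${shortfall:.2f}/hour")
```

On these numbers the current minimum is a little over a third of the implied average wage, and the shortfall is $2.29/hour, consistent with the "more than $2.25" cited above.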

The takeaway from all of this is simple: even the low benchmarks suggested here (one half the average production wage, the poverty level for a family of three, simply recapturing the minimum’s 1968 value) come in at more than $9.00. The benchmarks that actually sustain the value of the minimum or tie it to economic growth over time come in at close to twice that. And those that tie the minimum wage to the actual cost of living for working families run three times that or more. However bold the president’s pitch seems in the current political climate, a minimum wage of $9.00/hour is still a modest threshold by any sensible measure.

Colin Gordon is a professor of history at the University of Iowa. John Schmitt is a senior economist at the Center for Economic and Policy Research in Washington, D.C.
