For raw calculating prowess, a run-of-the-mill computer can handily outperform the human brain. But there are a variety of tasks that the human brain—or computer systems designed to act along the same principles—can do far more accurately than a traditional computer. And there are some behaviors of neurons, like consciousness, that computerized systems have never approached.

Part of the reason is that both the architecture and behavior of neurons and transistors are radically different. It's possible to program software that incorporates neuron-like behavior, but the underlying mismatch makes the software relatively inefficient.

A team of scientists at Cornell University and IBM Research have gotten together to design a chip that's fundamentally different: an asynchronous collection of thousands of small processing cores, each capable of the erratic spikes of activity and complicated connections that are typical of neural behavior. When hosting a neural network, the chip is remarkably power efficient. And the researchers say their architecture can scale arbitrarily large, raising the prospect of a neural network supercomputer.

Computer transistors work in binary; they're either on or off, and their state can only directly influence the next transistor they're wired to. Neurons don't work like that at all. They can accept inputs from an arbitrary number of other neurons via a structure called a dendrite, and they can send signals to a large number of other neurons through structures called axons. Finally, the signals they send aren't binary; instead, they're composed of a series of "spikes" of activity, with the information contained in the frequency and timing of these spikes.
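
To make the frequency-versus-timing distinction concrete, here is a minimal Python sketch. It assumes time is chopped into discrete ticks and represents a spike train as a list of 0s and 1s; the function names are hypothetical and purely illustrative, not anything taken from the researchers' work.

```python
def regular_spike_train(period_ticks, n_ticks):
    """Return a 0/1 list with one spike every `period_ticks` ticks."""
    return [1 if t % period_ticks == 0 else 0 for t in range(n_ticks)]


def spike_count(train):
    """Number of spikes in the train (a proxy for firing frequency)."""
    return sum(train)


def inter_spike_intervals(train):
    """Gaps, in ticks, between consecutive spikes (the timing information)."""
    times = [t for t, spiked in enumerate(train) if spiked]
    return [later - earlier for earlier, later in zip(times, times[1:])]


# Two trains with the same number of spikes over 100 ticks...
steady = regular_spike_train(10, 100)   # one spike every 10 ticks
burst = [1] * 10 + [0] * 90             # ten spikes in a row, then silence

# ...but very different timing.
print(spike_count(steady), inter_spike_intervals(steady))
print(spike_count(burst), inter_spike_intervals(burst))
```

Both trains carry ten spikes, so a receiver that only counted spikes could not tell them apart; the intervals are what distinguish a steady signal from a burst.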

While it's possible to model this sort of behavior on a traditional computer, the researchers involved in the work argue that there's a fundamental mismatch that limits efficiency. While the connections among neurons are physically part of the computation structure in a brain, they're stored in the main memory of a computer model of the brain, which means the processor has to wait while information is retrieved any time it wants to see how a modeled neuron should behave.

Neurons in silico

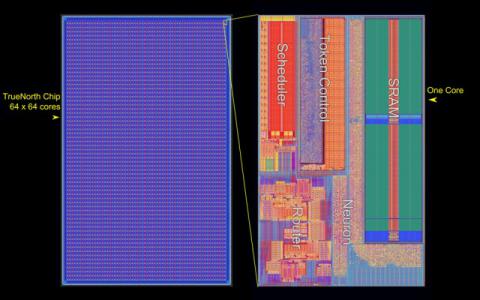

The new processor, which the team is calling TrueNorth, takes a radically different approach. Its 5.4 billion transistors include over 4,000 individual cores, each of which contains a collection of circuitry that behaves like a set of neurons. Each core has over 100,000 bits of memory, which store things like each neuron's state, the addresses of the neurons it receives signals from, and the addresses of the neurons it sends signals to. The memory also holds a value that reflects the strength of various connections, something seen in real neurons. Each core can receive input from 256 different "neurons" and can send spikes to a further 256.

The core also contains the communications hardware needed to send the spikes on to their destination. Since the whole chip is set up as a grid of neurons, addressing is as simple as providing x- and y-coordinates to get to the right core and then a neuron ID to get to the right recipient. The cores also contain random number generators to fully model the somewhat stochastic spiking activity seen in real neurons.
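
A rough way to picture a single core is as 256 input lines feeding 256 simple spiking units through a table of connection strengths. The Python sketch below is a toy under stated assumptions, not the chip's actual neuron model: the integrate-and-fire rule, the `threshold`, `leak`, and `jitter` parameters, and the `Core` class itself are all hypothetical choices made for illustration.

```python
import random


class Core:
    """Toy core: N_INPUTS input lines driving N_NEURONS integrate-and-fire units."""

    N_INPUTS = 256
    N_NEURONS = 256

    def __init__(self, threshold=4, leak=1, jitter=0, seed=0):
        # weights[i][n] is the strength of the connection from input i to neuron n
        # (0 means "not connected"). This plays the role of the per-core memory.
        self.weights = [[0] * self.N_NEURONS for _ in range(self.N_INPUTS)]
        self.potential = [0] * self.N_NEURONS
        self.threshold = threshold
        self.leak = leak
        self.jitter = jitter              # assumed: random extra threshold per tick
        self.rng = random.Random(seed)    # stand-in for the on-core random numbers

    def connect(self, input_line, neuron, weight=1):
        self.weights[input_line][neuron] = weight

    def tick(self, active_inputs):
        """Advance one time step and return the indices of neurons that spiked."""
        # Integrate: add the weight of every active input to its target neurons.
        for i in active_inputs:
            for n, w in enumerate(self.weights[i]):
                if w:
                    self.potential[n] += w
        spiked = []
        for n in range(self.N_NEURONS):
            # Leak: potentials decay toward zero when nothing arrives.
            self.potential[n] = max(0, self.potential[n] - self.leak)
            # Fire: compare against a threshold that may be randomly raised.
            if self.potential[n] >= self.threshold + self.rng.randint(0, self.jitter):
                spiked.append(n)
                self.potential[n] = 0     # reset after spiking
        return spiked


core = Core(threshold=3, leak=0)
core.connect(0, 5, weight=2)
core.connect(1, 5, weight=2)
print(core.tick([0]))   # neuron 5 is at 2, below threshold, so nothing fires
print(core.tick([1]))   # a second input pushes neuron 5 to 4, so it spikes
```

Setting `jitter` above zero makes otherwise identical inputs produce slightly different spike timing from run to run, which is the flavor of stochastic behavior the random number generators are there to provide.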

The authors describe the architecture's activity as follows:

> When a neuron on a core spikes, it looks up in local memory an axonal delay (four bits) and the destination address (8-bit absolute address for the target axon and two 9-bit relative addresses representing core hops in each dimension to the target core). This information is encoded into a packet that is injected into the mesh, where it is handed from core to core.
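
The field widths in that description add up to a 30-bit packet (4 + 8 + 9 + 9). The Python sketch below packs and unpacks such a packet and then walks it across the mesh. Several details are assumptions made for illustration and are not stated in the description: the order of the fields within the word, the use of two's complement for the signed hop counts, and the choice to travel along x before y. The function names are hypothetical as well.

```python
def pack_spike(delay, axon, dx, dy):
    """Pack a spike into a 30-bit integer: delay(4) | axon(8) | dx(9) | dy(9)."""
    if not 0 <= delay < 16:
        raise ValueError("delay must fit in 4 bits")
    if not 0 <= axon < 256:
        raise ValueError("axon must fit in 8 bits")
    for hops in (dx, dy):
        if not -256 <= hops <= 255:
            raise ValueError("hop count must fit in 9 signed bits")
    # Masking with 0x1FF keeps the low 9 bits (two's complement for negatives).
    return (delay << 26) | (axon << 18) | ((dx & 0x1FF) << 9) | (dy & 0x1FF)


def _signed9(value):
    """Interpret a 9-bit field as a signed two's-complement number."""
    return value - 512 if value & 0x100 else value


def unpack_spike(word):
    """Inverse of pack_spike: return (delay, axon, dx, dy)."""
    delay = (word >> 26) & 0xF
    axon = (word >> 18) & 0xFF
    dx = _signed9((word >> 9) & 0x1FF)
    dy = _signed9(word & 0x1FF)
    return delay, axon, dx, dy


def route(dx, dy):
    """Return the remaining (dx, dy) after each hop, moving in x first, then y."""
    path = []
    while dx != 0:
        dx -= 1 if dx > 0 else -1
        path.append((dx, dy))
    while dy != 0:
        dy -= 1 if dy > 0 else -1
        path.append((dx, dy))
    return path


word = pack_spike(delay=3, axon=200, dx=-2, dy=1)
print(unpack_spike(word))   # round-trips to the original fields
print(route(-2, 1))         # three hops until both offsets reach zero
```

Because the hop counts are relative, each core only has to decrement an offset and pass the packet along; once both offsets reach zero, the packet has arrived and the 8-bit field selects the target axon.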

In total, TrueNorth has a million programmable neurons that can establish 256 million connections among themselves. The whole thing is kept moving by a clock that operates at a leisurely 1kHz. But the communications happen asynchronously, and any core that doesn't have anything to do simply sits idle. As a result, the power density of TrueNorth is 20mW per square centimeter (a typical modern processor's figure is somewhere above 50 Watts per square centimeter). The chip was fabricated by Samsung using a 28nm process.
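
Those totals follow from the per-core figures. The short Python check below assumes exactly 4,096 cores (a 64×64 grid), which is one way of reading "over 4,000," and assumes every one of a core's 256 inputs can connect to every one of its 256 neurons.

```python
CORES = 64 * 64            # assumption: 4,096 cores arranged as a square grid
INPUTS_PER_CORE = 256
NEURONS_PER_CORE = 256

neurons = CORES * NEURONS_PER_CORE
connections = CORES * INPUTS_PER_CORE * NEURONS_PER_CORE
connection_bits_per_core = INPUTS_PER_CORE * NEURONS_PER_CORE

print(f"neurons:     {neurons:,}")        # 1,048,576
print(f"connections: {connections:,}")    # 268,435,456
print(f"connection bits per core: {connection_bits_per_core:,}")  # 65,536
```

Under these assumptions, "a million" and "256 million" are binary round numbers (2^20 and 256 × 2^20), and a one-bit-per-connection table would account for 65,536 of each core's 100,000-plus bits of memory.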

Software and performance

The problem with a radically different processing architecture is that it won't have any software available for it. However, the research team designed TrueNorth as a hardware implementation of an existing neural network software package called Compass. Thus, any problems that have been adapted to run on Compass can be run directly on TrueNorth; as the authors put it, "networks that run on TrueNorth match spike-for-spike with Compass." And there is a large software library available for Compass, including support vector machines, hidden Markov models, and more.

The researchers could also compare the performance of neural networks run on traditional hardware using Compass with the same networks running on TrueNorth. They found that TrueNorth cut energy use 176,000-fold compared to a traditional processor and by a factor of over 700 compared to specialized hardware designed to host neural networks.

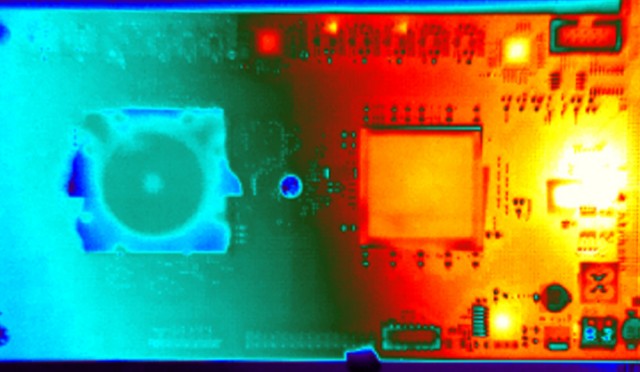

To give some sense of its actual performance, the researchers got TrueNorth to do object recognition on a 30 frames-per-second, 400×240 video feed in real time. Picking out pedestrians, bicyclists, cars, and trucks caused the processor to gobble up a grand total of 63 milliwatts.
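
To put that figure in per-frame terms, the back-of-the-envelope Python calculation below uses only the numbers quoted above. It assumes the 63 milliwatts is a steady average over the whole video stream; the variable names are illustrative.

```python
POWER_W = 0.063            # 63 milliwatts
FRAMES_PER_SECOND = 30
WIDTH, HEIGHT = 400, 240

pixels_per_frame = WIDTH * HEIGHT
energy_per_frame_j = POWER_W / FRAMES_PER_SECOND
energy_per_pixel_j = energy_per_frame_j / pixels_per_frame

print(f"pixels per frame: {pixels_per_frame:,}")                      # 96,000
print(f"energy per frame: {energy_per_frame_j * 1e3:.2f} millijoules")  # 2.10
print(f"energy per pixel: {energy_per_pixel_j * 1e9:.1f} nanojoules")   # 21.9
```

That works out to roughly 2 millijoules for each frame analyzed, or about 22 nanojoules per pixel.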

The authors argue that, in its current state, TrueNorth could make a valuable co-processor for systems that are intended to run existing neural network software. But they've also built it to scale, as its grid-like 2D mesh network is built with expansion in a third dimension in mind. "We have begun building neurosynaptic super-computers," the authors state, "by tiling multiple TrueNorth chips, creating systems with hundreds of thousands of cores, hundreds of millions of neurons, and hundreds of billions of synapses." If they have their way, we may eventually start talking of SOPS, or synaptic operations per second, instead of the traditional FLOPS.

Science, 2014. DOI: 10.1126/science.1254642
