A few days ago, I came across an old post on the Web site Bored Panda called “10+ Times People Accidentally Found Their Doppelgängers in Museums and Couldn’t Believe Their Eyes.” The post consisted of around thirty photos of people posing with portraits whose subjects looked eerily like them. There was a white-bearded man with reading glasses hanging around his neck, the spitting image of the pike-carrying soldier in Jan van Bijlert’s “Mars Vigilant.” (“Same nose!!!!” one commenter wrote.) There was a young woman in a navy-blue shirt, a dead ringer for the girl staring forlornly in William-Adolphe Bouguereau’s “The Broken Pitcher,” also wearing navy blue. For me, the funniest thing about the photos was the backstory each one seemed to tell. I imagined van Bijlert’s Dutch warrior almost cracking a smile as his middle-aged doppelgänger twisted into position below while a friend, recruited as the photographer, gamely offered direction. “Put your arm on your waist. Tilt your chin up—higher, higher, yes!”

The goofiness of the images—in one, a man substitutes a rolled-up museum guide for a Spanish nobleman’s riding glove—punctured the hushed reverence that we tend to associate with art-consuming experiences, even in the age of #MuseumSelfie Day.

The Bored Panda post reminded me, of all things, of a classic work of Marxist art criticism: John Berger’s “Ways of Seeing,” which aired as a TV series, in 1972, and was subsequently adapted into a book. For Berger, the point of European oil paintings, in most cases, was not to edify viewers but to flatter the wealthy patrons who commissioned them. Painting was publicity, he contended, which made it akin to contemporary advertising; the nineteenth-century nude with the come-hither glance wasn’t so different from the twentieth-century pinup. Whatever the time period, he wrote, “publicity turns consumption into a substitute for democracy. The choice of what one eats (or wears or drives) takes the place of significant political choice. Publicity helps to mask and compensate for all that is undemocratic within society. And it also masks what is happening in the rest of the world.” Berger, who hated what he saw as the élitist tendency to view art museums as reliquaries for holy objects, might have relished the silly subversiveness of the art-doppelgänger trend.

If you have spent any time at all on Instagram or Facebook or Twitter in the past couple of weeks, you have seen this trend going viral. In December, Google introduced a feature to its Arts & Culture app that allows you to take a selfie with your phone and use it to generate results from the company’s image database for your own museum doppelgänger. Last week, as more and more users discovered the feature, Arts & Culture briefly became the most downloaded app in the iTunes store. Social media was flooded with algorithmically generated diptychs—smartphone selfie on the left, fine-art portrait on the right. In the many celebratory articles that followed, the project was often framed as a way to democratize art by helping normal people to see themselves in it. When Amit Sood, the president of Google Arts & Culture, discussed the app with Bloomberg Technology, on Monday, he spoke of it in downright Bergerian terms. Growing up in Mumbai, India, Sood said, he had seen art “as a posh experience, and not something that was for me or for my people.” He added, “If you want to reach people like me, or at least how I used to be before, you have to find a reason for them to want to engage.”

When I fired up the app, I was initially less interested in finding my doppelgänger than in what the result might say about the current state of facial-recognition algorithms, which are famously bad at parsing nonwhite features. My last foray into face-matching involved an app called Fetch, which purported to tell users which breed of dog they most resembled; many of my Asian friends and I were told we looked like Shih Tzus. Joy Buolamwini, a black technologist and the founder of the Algorithmic Justice League, which seeks to raise awareness of algorithmic bias, calls this phenomenon the “coded gaze.” Just as the male gaze sees the world on its own terms, as a place made for men’s pleasure, the coded gaze sees everything according to the data sets on which its creators trained it. Typically, those data sets skew white. Not long ago, Buolamwini highlighted the story of a New Zealander of Asian descent whose passport photo was rejected by government authorities because a computer thought his eyes were closed.

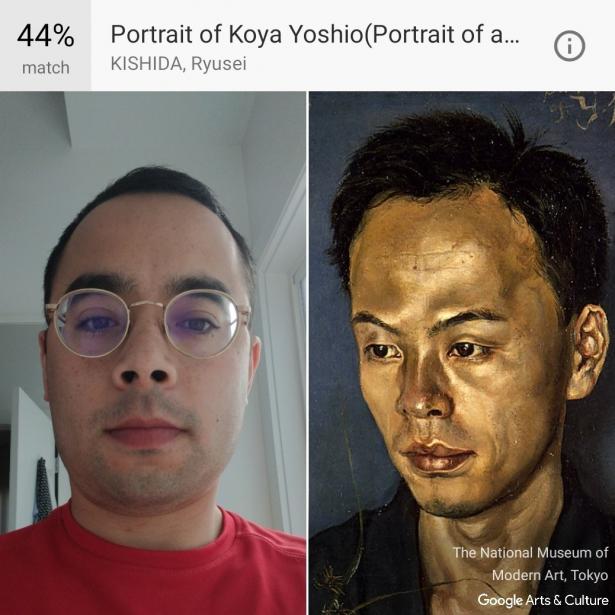

As it happened, the Arts & Culture app did better than I’d expected. The portrait I got, a painting by the twentieth-century Japanese artist Ryusei Kishida, showed a handsome, rugged-looking man named Koya Yoshio, with a tuft of brilliantly black hair. Not too shabby, I thought. Still, others had plenty of complaints. For TechCrunch, the writer Catherine Shu reported that nonwhite users were being confronted with “stereotypical tropes that white artists often resorted to when depicting people of color: slaves, servants or, in the case of many women, sexualized novelties.” In response, Google cast the blame not on its facial-analysis algorithm but on art history. The app, the company told Shu, was “limited by the images we have on our platform. Historical artworks often don’t reflect the diversity of the world. We are working hard to bring more diverse artworks online.”

I’ll leave it to computer scientists to judge Google’s algorithm. But the rise of the coded gaze has implications that go beyond the details of any one app. As my social-media timelines filled with images of my friends with their doppelgängers, I was struck more by their photos than by the matches. They were often alone, poorly lit, looking straight into the camera with a blank expression. In my own match image, I have a double chin and look awkwardly at a point below the camera. Next to my washed-out face, Ryusei’s painting looks shockingly vivid, right down to the vein in his subject’s forehead. Unlike the well-composed selfies and cheerful group shots that people usually share, these images were not primarily intended for human consumption. They were meant for the machine, useful only as a collection of data points. The resulting images of photographed face next to painted face, with a percentage score indicating how good the match was, seemed vaguely diagnostic, as if the painting had materialized like the pattern of bands in a DNA test. Judging by the flattered or insulted reactions on social media, many people saw their matches as revealing something about themselves.

In one way, the art selfie app might be seen as a fulfillment of Berger’s effort to demystify the art of the past. As an alternative to museums and other institutions that reinforce old hierarchies, Berger offered the pinboard hanging on the wall of an office or living room, where people stick images that appeal to them: paintings, postcards, newspaper clippings, and other visual detritus. “On each board all the images belong to the same language and all are more or less equal within it, because they have been chosen in a highly personal way to match and express the experience of the room’s inhabitant,” Berger writes. “Logically, these boards should replace museums.” (As the critic Ben Davis has noted, today’s equivalent of the pinboard collage might be Tumblr or Instagram.)

Yet in Berger’s story this flattening represents the people prying away power from “a cultural hierarchy of relic specialists.” Google Arts & Culture is overseen by a new cadre of specialists: the programmers and technology executives responsible for the coded gaze. Today the Google Cultural Institute, which released the Arts & Culture app, boasts more than forty-five thousand art works scanned in partnership with over sixty museums. What does it mean that our cultural history, like everything else, is increasingly under the watchful eye of a giant corporation whose business model rests on data mining? One dystopian possibility offered by critics in the wake of the Google selfie app was that Google was using all of the millions of unflattering photos to train its algorithms. Google has denied this. But the training goes both ways. As Google scans and processes more of the world’s cultural artifacts, it will be easier than ever to find ourselves in history, so long as we offer ourselves up to the computer’s gaze.

Adrian Chen joined The New Yorker as a staff writer in 2016. Previously, he was a staff writer at Gawker, from 2009 to 2013. His stories on Internet culture and technology have appeared in the Times Magazine, Wired, MIT Technology Review, The Nation and New York magazine. He is a founder of IRL Club, a live event series about the Internet, and a former contributor to the Onion News Network, the Onion’s first online video series. His story for Gawker exposing a notorious Internet troll won a 2013 Mirror Award from Syracuse University’s S. I. Newhouse School of Public Communications.
