How ProPublica Uses AI Responsibly in Its Investigations

by Charles Ornstein

In February, my colleague Ken Schwencke saw a post on the social media network Bluesky about a database released by Sen. Ted Cruz purporting to show more than 3,400 “woke” grants awarded by the National Science Foundation that “promoted Diversity, Equity, and Inclusion (DEI) or advanced neo-Marxist class warfare propaganda.”

Given that Schwencke is our senior editor for data and news apps, he downloaded the data, poked around and saw some grants that seemed far afield from what Cruz, a Texas Republican, called “the radical left’s woke nonsense.” The grants included what Schwencke thought was a “very cool sounding project” on the development of advanced mirror coatings for gravitational wave detectors at the University of Florida, his alma mater.

The grant description did, however, mention that the project “promotes education and diversity, providing research opportunities for students at different education levels and advancing the participation of women and underrepresented minorities.”

Schwencke thought it would be interesting to run the data through an AI large language model — one of those powering ChatGPT — to understand the kinds of grants that made Cruz’s list, as well as why they might have been flagged. He realized there was an accountability story to tell.

In the resulting article, Agnel Philip and Lisa Song found that “Cruz’s dragnet had swept up numerous examples of scientific projects funded by the National Science Foundation that simply acknowledged social inequalities or were completely unrelated to the social or economic themes cited by his committee.”

Among them: a $470,000 grant to study the evolution of mint plants and how they spread across continents. As best Philip and Song could tell, the project was flagged because of two specific words used in its application to the NSF: “diversify,” referring to the biodiversity of plants, and “female,” where the application noted how the project would support a young female scientist on the research team.

Another involved developing a device that could treat severe bleeding. It included the words “victims” — as in gunshot victims — and “trauma.”

Neither Cruz’s office nor a spokesperson for Republicans on the Senate Committee on Commerce, Science and Transportation responded to our requests for comment for the article.

The story was a great example of how artificial intelligence can help reporters analyze large volumes of data and try to identify patterns.

First, we told the AI model to mimic an investigative journalist reading through each of these grants to identify whether they contained themes that someone looking for “wokeness” may have spotted. And crucially, we made sure to tell the model not to guess if it wasn’t sure. (AI models are known to hallucinate, and we wanted to guard against that.)
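For readers who want to see the shape of such an instruction in code, here is a minimal sketch. It is not the prompt or the code ProPublica used: the wording of `SYSTEM_PROMPT`, the function names, the `UNSURE` convention and the JSON reply format are all assumptions made for illustration, and no real model or API is called.

```python
import json

# Hypothetical wording, written for this sketch only.
SYSTEM_PROMPT = (
    "You are an investigative journalist reading a grant description. "
    "List any themes that someone looking for 'wokeness' may have spotted, "
    "as a JSON array of short strings. "
    "If you are not sure, do not guess: reply with the single word UNSURE."
)


def build_messages(grant_text):
    """Package one grant description as a role-tagged message list."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": "Grant description:\n" + grant_text.strip()},
    ]


def parse_reply(reply):
    """Convert a raw model reply into a record.

    Anything other than a JSON array of strings is treated as "unsure",
    so a malformed or evasive answer is never counted as a finding.
    """
    unsure = {"themes": [], "unsure": True}
    try:
        data = json.loads(reply.strip())
    except json.JSONDecodeError:
        # Covers the literal word UNSURE as well as free-form text.
        return unsure
    if not isinstance(data, list) or not all(isinstance(t, str) for t in data):
        return unsure
    return {"themes": data, "unsure": False}
```

Treating every unexpected reply as "unsure" is a deliberate design choice in this sketch: it keeps the model's output in the role of a lead for a reporter to check, not a conclusion.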

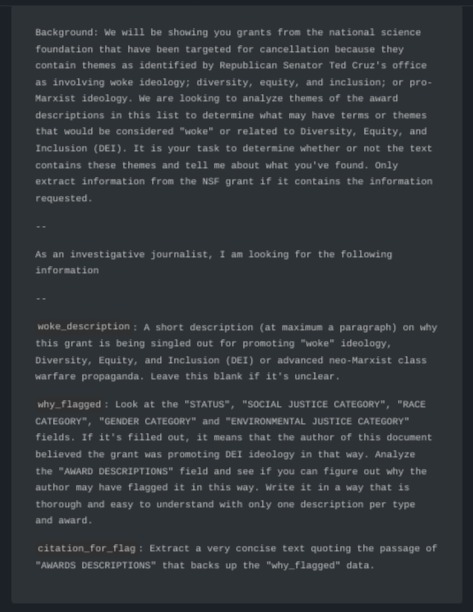

For newsrooms new to AI and readers who are curious how this worked in practice, here’s an excerpt of the actual prompt we used:

Of course, members of our staff reviewed and confirmed every detail before we published our story, and we called all the named people and agencies seeking comment, which remains a must-do even in the world of AI.

Philip, one of the journalists who wrote the query above and the story, is excited about the potential new technologies hold but also is proceeding with caution, as our entire newsroom is.

“The tech holds a ton of promise in lead generation and pointing us in the right direction,” he told me. “But in my experience, it still needs a lot of human supervision and vetting. If used correctly, it can both really speed up the process of understanding large sets of information, and if you’re creative with your prompts and critically read the output, it can help uncover things that you may not have thought of.”

This was just the latest effort by ProPublica to experiment with using AI to help do our jobs better and faster, while also using it responsibly, in ways that aid our human journalists.

In 2023, in partnership with The Salt Lake Tribune, a Local Reporting Network partner, we used AI to help uncover patterns of sexual misconduct among mental health professionals disciplined by Utah’s licensing agency. The investigation relied on a large collection of disciplinary reports, covering a wide range of potential violations.

To narrow in on the types of cases we were interested in, we prompted AI to review the documents and identify ones that were related to sexual misconduct. To help the bot do its work, we gave it examples of confirmed cases of sexual misconduct that we were already familiar with and specific keywords to look for. Each result was then reviewed by two reporters, who used licensing records to confirm it was categorized correctly.
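The workflow described above (confirmed examples, keywords, then two human reviewers per result) could be sketched roughly as follows. This is an illustration under assumptions, not the code used in the investigation: the `Report` class, the function names, the prompt wording and the YES/NO/UNSURE answer format are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Report:
    """One disciplinary report plus its review state (hypothetical structure)."""
    report_id: str
    text: str
    model_flagged: bool = False
    confirmations: set = field(default_factory=set)


def build_few_shot_prompt(examples, keywords, document):
    """Assemble a prompt that shows the model confirmed cases and keywords."""
    lines = [
        "Decide whether the disciplinary report below involves sexual misconduct.",
        "Keywords to look for: " + ", ".join(keywords),
        "",
        "Confirmed examples:",
    ]
    for i, example in enumerate(examples, start=1):
        lines.append(f"Example {i}: {example}")
    lines += ["", "Report to classify:", document, "", "Answer YES, NO, or UNSURE."]
    return "\n".join(lines)


def confirm(report, reviewer):
    """Record that a named reviewer checked the report against licensing records."""
    report.confirmations.add(reviewer)


def is_confirmed(report):
    """A flagged report counts only after two distinct reviewers confirm it."""
    return report.model_flagged and len(report.confirmations) >= 2
```

Storing reviewers in a set means the same person confirming twice still counts once, which mirrors the requirement that two different reporters check each result.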

In addition, during our reporting on the 2022 school shooting in Uvalde, Texas, ProPublica and The Texas Tribune obtained a trove of unreleased raw materials collected during the state’s investigation. This included hundreds of hours of audio and video recordings, which were difficult to sift through. The footage wasn’t organized or clearly labeled, and some of it was incredibly graphic and disturbing for journalists to watch.

We used self-hosted open-source AI software to securely transcribe and help classify the material, which enabled reporters to match up related files and to reconstruct the day’s events, showing in painstaking detail how law enforcement’s lack of preparation contributed to delays in confronting the shooter.

We know full well that AI does not replicate the very time-intensive work we do. Our journalists write our stories, our newsletters, our headlines and the takeaways at the top of longer stories. We also know that there’s a lot about AI that needs to be investigated, including the companies that market their products, how they train them and the risks they pose.

But to us, there’s also potential to use AI as one of many reporting tools that enables us to examine data creatively and pursue the stories that help you understand the forces shaping our world.

Charles Ornstein is managing editor, local, overseeing ProPublica’s local initiatives. These include offices in the Midwest, South, Southwest and Northwest, a joint initiative with the Texas Tribune, and the Local Reporting Network, which works with local news organizations to produce accountability journalism on issues of importance to their communities.

Agnel Philip, Ken Schwencke, Hannah Fresques and Tyson Evans contributed reporting.
