Imagine it’s the 1950s and you’re in charge of one of the world’s first electronic computers. A company approaches you and says: “We have 10 million words of French text that we’d like to translate into English. We could hire translators, but is there some way your computer could do the translation automatically?”

At this time, computers are still a novelty, and no one has ever done automated translation. But you decide to attempt it. You write a program that examines each sentence and tries to understand the grammatical structure. It looks for verbs, the nouns that go with the verbs, the adjectives modifying nouns, and so on. With the grammatical structure understood, your program converts the sentence structure into English and uses a French-English dictionary to translate individual words.

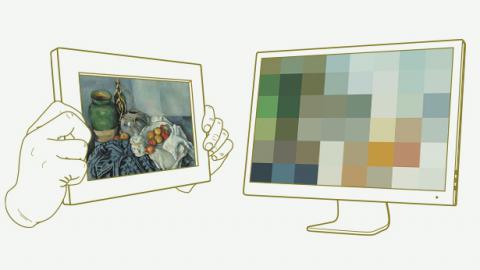

For several decades, most computer translation systems used ideas along these lines — long lists of rules expressing linguistic structure. But in the late 1980s, a team from IBM’s Thomas J. Watson Research Center in Yorktown Heights, N.Y., tried a radically different approach. They threw out almost everything we know about language — all the rules about verb tenses and noun placement — and instead created a statistical model.

Michael Nielsen

They did this in a clever way. They got hold of a copy of the transcripts of the Canadian parliament from a collection known as Hansard. By Canadian law, Hansard is available in both English and French. They then used a computer to compare corresponding English and French text and spot relationships.

For instance, the computer might notice that sentences containing the French word bonjour tend to contain the English word hello in about the same position in the sentence. The computer didn’t know anything about either word — it started without a conventional grammar or dictionary. But it didn’t need those. Instead, it could use pure brute force to spot the correspondence between bonjour and hello.

By making such comparisons, the program built up a statistical model of how French and English sentences correspond. That model matched words and phrases in French to words and phrases in English. More precisely, the computer used Hansard to estimate the probability that an English word or phrase will be in a sentence, given that a particular French word or phrase is in the corresponding translation. It also used Hansard to estimate probabilities for the way words and phrases are shuffled around within translated sentences.

Using this statistical model, the computer could take a new French sentence — one it had never seen before — and figure out the most likely corresponding English sentence. And that would be the program’s translation.
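To make the idea concrete, here is a toy sketch of that kind of estimation. It is not the IBM team's actual system. The three-sentence corpus is invented, the names `train` and `best_english` are my own, and the procedure is a simplified expectation-maximization loop of the sort usually associated with the simplest word-alignment models. It also ignores the reordering probabilities described above and simply translates word by word.

```python
from collections import defaultdict

# Invented toy parallel corpus (French, English); not Hansard data.
corpus = [
    ("bonjour monde".split(), "hello world".split()),
    ("bonjour".split(), "hello".split()),
    ("le monde".split(), "the world".split()),
]


def train(corpus, iterations=20):
    """Estimate t[(e, f)], an approximation of P(English word e | French word f)."""
    english_vocab = {e for _, english in corpus for e in english}
    # Start with no knowledge: every English word is equally likely.
    t = defaultdict(lambda: 1.0 / len(english_vocab))
    for _ in range(iterations):
        count = defaultdict(float)  # expected number of times f produced e
        total = defaultdict(float)  # expected number of words f produced
        for french, english in corpus:
            for e in english:
                # Share one unit of credit for e among the French words
                # in the same sentence pair, in proportion to current beliefs.
                norm = sum(t[(e, f)] for f in french)
                for f in french:
                    share = t[(e, f)] / norm
                    count[(e, f)] += share
                    total[f] += share
        # Re-estimate the probabilities from the expected counts.
        t = defaultdict(
            float, {(e, f): c / total[f] for (e, f), c in count.items()}
        )
    return t


def best_english(t, french_word):
    """Return the English word with the highest estimated probability."""
    candidates = [(p, e) for (e, f), p in t.items() if f == french_word]
    return max(candidates)[1]


t = train(corpus)
print(" ".join(best_english(t, f) for f in "bonjour le monde".split()))
```

Even on this tiny corpus the loop starts with no dictionary at all. The pairing of bonjour with hello emerges only from which words keep turning up together in corresponding sentences.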

When I first heard about this approach, it sounded ludicrous. This statistical model throws away nearly everything we know about language. There’s no concept of subjects, predicates or objects, none of what we usually think of as the structure of language. And the models don’t try to figure out anything about the meaning (whatever that is) of the sentence either.

Despite all this, the IBM team found this approach worked much better than systems based on sophisticated linguistic concepts. Indeed, their system was so successful that the best modern systems for language translation — systems like Google Translate — are based on similar ideas.

Statistical models are helpful for more than just computer translation. There are many problems involving language for which statistical models work better than those based on traditional linguistic ideas. For example, the best modern computer speech-recognition systems are based on statistical models of human language. And online search engines use statistical models to understand search queries and find the best responses.

Many traditionally trained linguists view these statistical models skeptically. Consider the following comments by the great linguist Noam Chomsky:

“There’s a lot of work which tries to do sophisticated statistical analysis, … without any concern for the actual structure of language, as far as I’m aware that only achieves success in a very odd sense of success. … It interprets success as approximating unanalyzed data. … Well that’s a notion of success which is I think novel, I don’t know of anything like it in the history of science.”

Chomsky compares the approach to a statistical model of insect behavior. Given enough video of swarming bees, for example, researchers might devise a statistical model that allows them to predict what the bees might do next. But in Chomsky’s opinion it doesn’t impart any true understanding of why the bees dance in the way that they do.

Library of Congress, Geography and Map Division

A map of New York from 1896. The four-color theorem states that any map can be shaded using four colors in such a way that no two adjacent regions have the same color.

Related stories are playing out across science, not just in linguistics. In mathematics, for example, it is becoming more and more common for problems to be settled using computer-generated proofs. An early example occurred in 1976, when Kenneth Appel and Wolfgang Haken proved the four-color theorem, the conjecture that every map can be colored using four colors in such a way that no two adjacent regions have the same color. Their computer proof was greeted with controversy. It was too long for a human being to check, much less understand in detail. Some mathematicians objected that the theorem couldn’t be considered truly proved until there was a proof that human beings could understand.
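For readers who want to see what the theorem claims on a small case, here is a sketch that searches for a coloring of a made-up six-region map by simple backtracking. This is not Appel and Haken's proof technique, which had to cover every possible map. The map, the region names and the function `color_map` are hypothetical, chosen only for illustration.

```python
def color_map(neighbors, num_colors=4):
    """Backtracking search for a coloring in which no two adjacent
    regions share a color. Returns a dict region -> color, or None."""
    regions = list(neighbors)
    coloring = {}

    def assign(i):
        if i == len(regions):
            return True  # every region has a color
        region = regions[i]
        for color in range(num_colors):
            # A color is allowed if no already-colored neighbor uses it.
            if all(coloring.get(n) != color for n in neighbors[region]):
                coloring[region] = color
                if assign(i + 1):
                    return True
                del coloring[region]  # undo and try the next color
        return False

    return coloring if assign(0) else None


# A hypothetical map: one central region surrounded by a ring of five.
neighbors = {
    "center": ["a", "b", "c", "d", "e"],
    "a": ["center", "b", "e"],
    "b": ["center", "a", "c"],
    "c": ["center", "b", "d"],
    "d": ["center", "c", "e"],
    "e": ["center", "d", "a"],
}

print(color_map(neighbors, 4))  # a valid assignment using four colors
print(color_map(neighbors, 3))  # three colors are not enough for this map
```

Checking one small map like this is easy to follow by eye. The difficulty in the 1976 proof was that the computer had to work through far more cases than any person could inspect.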

Today, the proofs of many important theorems have no known human-accessible form. Sometimes the computer is merely doing grunt work — calculations, for example. But as time goes on, computers are making more conceptually significant contributions to proofs. One well-known mathematician, Doron Zeilberger of Rutgers University in New Jersey, has gone so far as to include his computer (which he has named Shalosh B. Ekhad) as a co-author of his research work.

Not all mathematicians are happy about this. In an echo of Chomsky’s doubts, the Fields Medal-winning mathematician Pierre Deligne said: “I don’t believe in a proof done by a computer. In a way, I am very egocentric. I believe in a proof if I understand it, if it’s clear.”

On the surface, statistical translation and computer-assisted proofs seem different. But the two have something important in common. In mathematics, a proof isn’t just a justification for a result. It’s actually a kind of explanation of why a result is true. So computer-assisted proofs are, arguably, computer-generated explanations of mathematical theorems. Similarly, in computer translation the statistical models provide circumstantial explanations of translations. In the simplest case, they tell us that bonjour should be translated as hello because the computer has observed that it has nearly always been translated that way in the past.

Thus, we can view both statistical translation and computer-assisted proofs as instances of a much more general phenomenon: the rise of computer-assisted explanation. Such explanations are becoming increasingly important, not just in linguistics and mathematics, but in nearly all areas of human knowledge.

But as smart skeptics like Chomsky and Deligne (and critics in other fields) have pointed out, these explanations can be unsatisfying. They argue that these computer techniques are not offering us the sort of insight provided by an orthodox approach. In short, they’re not real explanations.

A traditionalist scientist might agree with Chomsky and Deligne and go back to conventional language models or proofs. A pragmatic young scientist, eager to break new ground, might respond: “Who cares, let’s get on with what works,” and continue to pursue computer-assisted work.

Better than either approach is to take both the objections and the computer-assisted explanations seriously. Then we might ask the following: What qualities do traditional explanations have that aren’t currently shared by computer-assisted explanations? And how can we improve computer-assisted explanations so that they have those qualities?

For instance, might it be possible to get the statistical models of language to deduce the existence of verbs and nouns and other parts of speech? That is, perhaps we could actually see verbs as emergent properties of the underlying statistical model. Even better, might such a deduction actually deepen our understanding of existing linguistic categories? For instance, imagine that we discover previously unknown units of language. Or perhaps we might uncover new rules of grammar and broaden our knowledge of linguistics at the conceptual level.

As far as I know, this has not yet happened in the field of linguistics. But analogous discoveries are now being made in other fields. For instance, biologists are increasingly using genomic models and computers to deduce high-level facts about biology. By using computers to compare the genomes of crocodiles, researchers have determined that the Nile crocodile, formerly thought to be a single species, is actually two different species. And in 2010 a new species of human, the Denisovans, was discovered through an analysis of the genome of a finger-bone fragment.

Another interesting avenue is being pursued by Hod Lipson of Columbia University. Lipson and his collaborators have developed algorithms that, when given a raw data set describing observations of a mechanical system, will actually work backward to infer the “laws of nature” underlying those data. In particular, the algorithms can figure out force laws and conserved quantities (like energy or momentum) for the system. The process can provide considerable conceptual insight. So far Lipson has analyzed only simple systems (though complex raw data sets). But it’s a promising case in which we start from a very complex situation, and then use a computer to simplify the description to arrive at a much higher level of understanding.
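To give a flavor of what "working backward" from data to a conserved quantity can mean, here is a deliberately tiny sketch. It is not the algorithm used by Lipson and his collaborators, only a much simpler stand-in. I assume the data come from an idealized mass on a spring, I assume the conserved quantity has the form v² + c·x², and I pick the coefficient c that makes that expression vary least across the observations. The function names and the simulated data are hypothetical.

```python
import math


def simulate_spring(omega=2.0, steps=200, dt=0.05):
    """Noise-free (position, velocity) samples of an ideal oscillator."""
    return [
        (math.cos(omega * k * dt), -omega * math.sin(omega * k * dt))
        for k in range(steps)
    ]


def fit_conserved_coefficient(samples):
    """Find c that minimizes the variance of v**2 + c * x**2.

    The variance is quadratic in c, so the minimum has the closed form
    c = -Cov(v**2, x**2) / Var(x**2).
    """
    x2 = [x * x for x, _ in samples]
    v2 = [v * v for _, v in samples]
    mean_x2 = sum(x2) / len(x2)
    mean_v2 = sum(v2) / len(v2)
    cov = sum((a - mean_x2) * (b - mean_v2) for a, b in zip(x2, v2))
    var = sum((a - mean_x2) ** 2 for a in x2)
    return -cov / var


samples = simulate_spring()
c = fit_conserved_coefficient(samples)
energies = [v * v + c * x * x for x, v in samples]
print("fitted coefficient:", c)
print("spread of the candidate conserved quantity:", max(energies) - min(energies))
```

In this idealized setting the fitted coefficient matches the square of the oscillation frequency used in the simulation, so the program has in effect rediscovered an energy-like quantity from raw positions and velocities. The hard part, which this sketch sidesteps entirely, is discovering the form of the expression rather than assuming it.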

The examples I’ve given are modest. As yet, we have few powerful techniques for taking a computer-assisted proof or model, extracting the most important ideas, and answering conceptual questions about the proof or model. But computer-assisted explanations are so useful that they’re here to stay. And so we can expect that developing such techniques will be an increasingly important aspect of scientific research over the next few decades.

Correction: This article was revised on July 24, 2015, to reflect that a Denisovan bone fragment was not found in an Alaskan cave.
