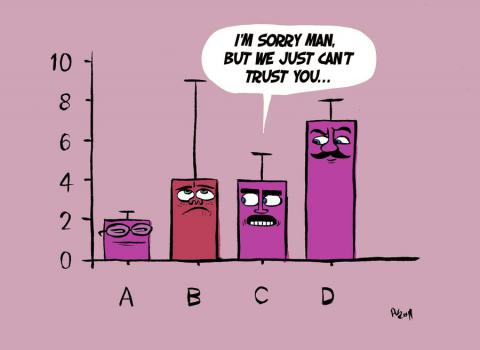

Today, Science published the first results from a massive reproducibility project, in which more than 250 psychology researchers tried to replicate the results of 100 papers published in three psychology journals. Despite working with the original authors and using original materials, only 36% of the replications produced statistically significant results, and more than 80% of the studies reported a stronger effect size in the original study than in the replication. To the authors, however, this is not a sign of failure; rather, it tells us that science is working as it should:

Humans desire certainty, and science infrequently provides it. As much as we might wish it to be otherwise, a single study almost never provides definitive resolution for or against an effect and its explanation. The original studies examined here offered tentative evidence; the replications we conducted offered additional, confirmatory evidence. In some cases, the replications increase confidence in the reliability of the original results; in other cases, the replications suggest that more investigation is needed to establish the validity of the original findings. Scientific progress is a cumulative process of uncertainty reduction that can only succeed if science itself remains the greatest skeptic of its explanatory claims.

We discussed these findings with Jelte M. Wicherts, Associate Professor of Methodology and Statistics at Tilburg University, whose research focuses on this area but who was not a co-author on the paper.

-Are you surprised by any of the results?

Overall, I am not surprised by these findings. We already have quite strong indirect and direct evidence for the relatively high publication and reporting biases in psychology (and other fields), implying that many findings in the psychological literature are too good to be true. Given the small samples in most studies in social and cognitive psychology and the subtlety of most of the effects that we study, we cannot possibly obtain a significant outcome almost every time, as the literature appears to suggest. There are surveys in which most psychological researchers admit to making opportunistic decisions in collecting and analyzing data, and in presenting their results. Estimates of the proportion of completed psychological studies that get published are lower than 50%. Many meta-analyses show signs of publication bias (small study effects). There is also recent direct evidence showing that researchers report selectively in psychology and related fields. This collective problem is psychologically and socially understandable given that journals will publish only significant results (or at least, researchers think they will).

This new study corroborates that effects reported in the psychological literature are often inflated, and the most likely culprits are publication bias and reporting biases. Some results appear to replicate just fine, while further research will be needed to determine which original findings were false positives (quite likely given these biases) and which effects turn out to be moderated by some aspect of the study. One effect went from strongly positive in the original to strongly negative in the replication.
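The inflation mechanism described here can be illustrated with a small simulation. This is only a sketch under stated assumptions, not an analysis from the project: the true effect size (0.2), the sample size (20 per group), and the rule that only results significant at the 5% level get published are all hypothetical, and each study's observed effect is drawn from a normal approximation.

```python
import math
import random


def simulate_published_effect(true_d=0.2, n_per_group=20, n_studies=20000, seed=1):
    """Compare the mean effect across all studies with the mean among 'published' ones.

    Assumptions (hypothetical, for illustration only):
    - each study's observed standardized effect is Normal(true_d, sqrt(2 / n_per_group))
    - a study is 'published' only if it is significant in a two-sided z-test at alpha = 0.05
    """
    rng = random.Random(seed)
    se = math.sqrt(2 / n_per_group)  # approximate standard error of the effect estimate
    all_effects = []
    published = []
    for _ in range(n_studies):
        observed = rng.gauss(true_d, se)
        all_effects.append(observed)
        if abs(observed) / se > 1.96:  # significance filter standing in for publication bias
            published.append(observed)
    mean_all = sum(all_effects) / len(all_effects)
    mean_published = sum(published) / len(published)
    share_published = len(published) / n_studies
    return mean_all, mean_published, share_published


mean_all, mean_published, share_published = simulate_published_effect()
print(f"mean effect, all studies:       {mean_all:.2f}")
print(f"mean effect, published studies: {mean_published:.2f}")
print(f"share of studies published:     {share_published:.2%}")
```

Under these assumptions, the studies that pass the significance filter overstate the true effect on average, so an unbiased replication of a published study would be expected to find a smaller effect.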

-It’s easy to get discouraged by these findings and lose faith in research results. How do you respond to that?

Because we as a research group find so many errors in the reporting of results and such clear signs of publication bias in the literature, we run the risk of becoming overly negative. This result shows that the field is actually doing pretty well; this study shows that psychology is cleaning up its act. Yes, there are problems, but we can address these. Some results appear weaker or more fragile than we expected. Yet many findings appear to be robust even though the original studies were not preregistered and we do not know how many more similar studies were put in the file drawer because their results were less desirable.

-A criticism we’ve heard of replication efforts is that it’s very difficult for a new group of people to gain the skills and tools to do the same study as well as the original authors, so a perfectly valid result may still fail to be replicated. Do you think this study addresses this criticism in any way?

The Open Science Collaborators have installed several checks and balances to tackle this problem. Studies to be replicated were matched with the replicator teams on the basis not only of interests and resources, but also of the teams’ expertise. The open data files clearly indicate the expertise of each replicator team, and the claim that a group of over 250 psychologists lacks expertise in doing these kinds of experiments is a bit of a stretch. Certainly there may be debates about certain specifics of the studies, and I expect the original researchers to point at methodological and theoretical explanations for the supposed discrepancy between the original finding and the replication. (Several of the original researchers responded to the final replication report, as can be seen on the project’s OSF page.) Such explanations are often ad hoc and typically ignore the role of chance: given the small effects and sample sizes used in most original studies, finding a significant result in one study and a non-significant result in another study may well be completely accidental. Still, they are to be taken seriously and perhaps studied further.
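The role of chance mentioned above can be made concrete with a rough power calculation. The sketch below assumes a two-group design analyzed with a two-sided z-test (a normal approximation to the usual t-test); the effect size of 0.3 and the 30 participants per group are hypothetical values chosen for illustration, not figures from the project.

```python
import math
from statistics import NormalDist


def power_two_sample(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test for standardized effect d."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)       # critical value, about 1.96 for alpha = 0.05
    shift = d * math.sqrt(n_per_group / 2)  # expected value of the test statistic
    # Probability that the statistic lands beyond either critical value
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


def prob_discordant(d, n_per_group, alpha=0.05):
    """Probability that exactly one of two independent, identical studies is significant."""
    p = power_two_sample(d, n_per_group, alpha)
    return 2 * p * (1 - p)


# Hypothetical example: a small true effect studied with small samples
d, n = 0.3, 30
print(f"power of a single study: {power_two_sample(d, n):.2f}")
print(f"chance the two studies disagree on significance: {prob_discordant(d, n):.2f}")
```

With low power, two faithful runs of the same experiment can easily disagree on significance even when the effect is real, which is why a single failed replication does not by itself require a methodological explanation.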

One should always report one’s methods and results in a manner that allows for independent replication; we now have many safe online locations to put supplementary information, materials, and data, and so I hope this project highlights the importance of reporting studies in a much more replicable and reproducible manner.

-Is there anything else about the study you’d like to add?

This project quite clearly shows that findings reported in the top psychology journals should not be taken at face value. The project is also reassuring in the sense that it shows how many psychological researchers are genuinely concerned about true progress in our field. The project is a good example of what open science collaboration can produce: replication protocols were preregistered, all analyses were double-checked by internal auditors, and the materials and data are all open for anyone to disagree with. It cannot get much better than this.

I hope this impressive project will raise awareness among researchers, peer reviewers, and journal editors about the real need to publish all relevant studies, by which I mean those studies that are methodologically and substantively rigorous regardless of their outcome. We need to pre-register our studies more, exercise openness with respect to the data, analyses, and materials (via OSF for instance), and publish results in a more reproducible way. Publication bias and reporting biases will not entirely go away, but at least now we know how to counter them: If we want to make sure our results are directly replicable across different locations, we team up with others and do it all over again.
