Algorithms of Oppression

Safiya Umoja Noble

New York University Press

ISBN: 9781479837243

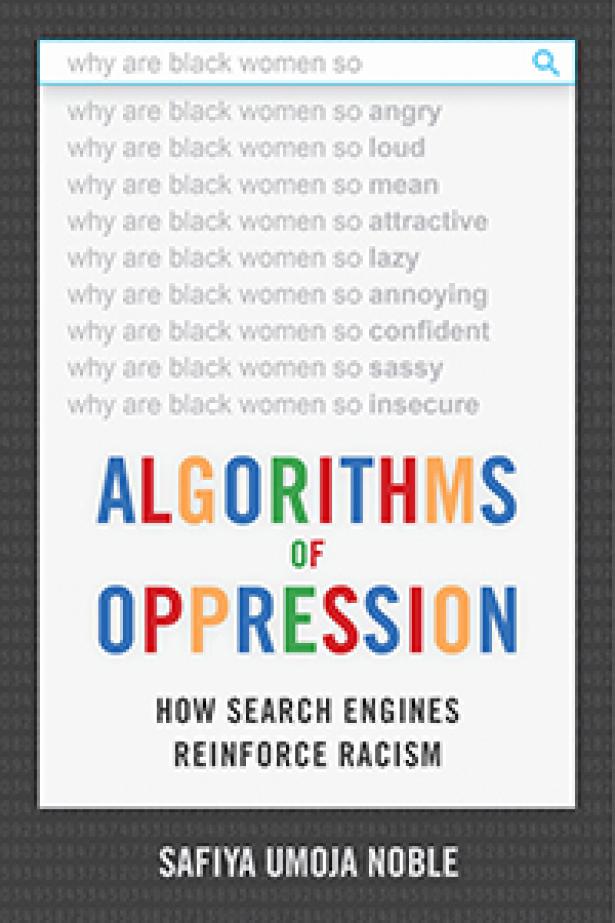

In Algorithms of Oppression, Safiya Umoja Noble clearly explains how search engines, used by billions of people daily, are not innocent, neutral vehicles for finding information. They are not benign; they are shaped by programmers, human beings with their own prejudices and motives, who sometimes seek to promote a particular viewpoint and sometimes merely reflect their own skewed version of reality.

Noble focuses on degrading stereotypes of women of African descent as a prime example of these prejudices, which translate into overt racism. For example, she cites entering a search for “three black teenagers” in 2010 and getting mug shots as the result. That same year, the search “black girls” brought the viewer to porn sites.

This might suggest that the problem could be easily solved: programmers who set the various search parameters could be trained to leave their own prejudices behind.

Yet the simple explanation of individual prejudices creeping into the programs that power search is naïve, at best. The forces at work are far beyond the control of individuals. As Noble explains: “They include decision-making protocols that favor corporate elites and the powerful, and they are implicated in global economic and social inequality.”

So when people search for “Michelle Obama” and receive results associating the former First Lady with apes, or see the White House shown as the “Nigga House” during President Obama’s term in office, their perceptions are influenced, and those influences shape their votes, political donations, and buying behavior. Noble states that we must ask “questions about how technological practices are embedded with values, which often obscure the social realities within which representations are formed.”

Studies indicate that Google alone processes over 3.5 billion searches each day. It is a prime source of information for students, teachers, authors, journalists, and many others. The results of any search influence the searcher, and, as the examples here show, they are often far from accurate and sometimes serve only to reinforce negative stereotypes.

Noble describes entering the term “beautiful” and getting a screen of pictures of white people. When she entered “ugly,” the results were a racial mix.

This reviewer repeated the experiment but added “people” to each search (entering just “beautiful” brought up very few pictures of people; the results were sunsets, flowers, and the like). “Beautiful people” produced images of mainly white women, with a few white men and a smattering of women of color. “Ugly people” produced a far more even mix of men, women, white people, and people of color.

Do people naturally think of white women as beautiful and non-white women as unattractive, or is society being programmed to believe this? If the latter, what else are the various search engines programming society to believe? Where is the limit to their power?

Things can change. After Noble wrote extensively about the racism so prevalent in the results of the Google search “black girls,” she reported that by 2012, two years after she first became aware of the situation, the results from that search were far more reasonable.

When this reviewer did the search, he found that the first page of results, with one exception, showed articles about the challenges black girls face in schools and in the larger society. The exception was a dating site called “Black Girls are Easy.” A search for “white girls” brought up a page of sources for obtaining a book by that name.

Due at least in part to Noble’s efforts, overt racism against women of color in Google searches has been reduced. But her research exposes a much larger problem, of which racism is but one ugly component. Support for imperialism, colonization, wars, and a myriad of other social ills can become accepted, even by those victimized by them, because of careless or contrived search engine results.

Algorithms of Oppression is a wake-up call about the biases of the internet, and it should motivate all concerned people to ask why those biases exist and whom they benefit.

Robert Fantina is the author of Empire, Racism and Genocide: A History of U.S. Foreign Policy. His articles on foreign policy, most frequently concerning Israel and Palestine, have appeared in such venues as CounterPunch and WarIsaCrime.org.
