Deep learning is an approach to artificial intelligence that is inspired by the brain’s neural networks. The technique is contributing to a plethora of technologies, from automated video analysis to language translation. In a paper online in Nature, Banino et al.1 use this framework to gain insights into real-life neuronal networks — in particular, how geometrically regular representations of space can facilitate flexible navigation strategies.

Deep-learning networks can be taught how to process inputs to achieve a particular output — for instance, learning to pick out a particular face in many photos of different people. The networks are ‘deep’ in that they are made up of sequential layers of repeated computational units. Each unit receives inputs from similar units in the previous layer and sends outputs to those in the next. Mathematically, such a network can be viewed as a high-dimensional function, which can be modulated by altering how the outputs of one layer are weighted in the next.
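To make the "high-dimensional function" view concrete, here is a minimal sketch in Python. The layer sizes, the weight values and the tanh nonlinearity are illustrative assumptions, not details of the network built by Banino and colleagues.

```python
import math


def layer(inputs, weights, biases):
    """One layer: each unit computes a weighted sum of the previous layer's outputs."""
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        outputs.append(math.tanh(total))  # squash the sum into the range (-1, 1)
    return outputs


def network(inputs, layers):
    """Pass the inputs through each layer in sequence."""
    activity = inputs
    for weights, biases in layers:
        activity = layer(activity, weights, biases)
    return activity


# Hypothetical weights: 2 inputs -> 3 hidden units -> 1 output unit.
LAYERS = [
    ([[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.0]),
]

print(network([1.0, 0.5], LAYERS))
```

Changing any single weight changes the function that the whole network computes, which is what training exploits.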

The network tunes the function during a training phase, which typically relies on a set of input–output examples. For instance, a deep-learning system might be shown a series of photos, and told which ones contain the face it aims to identify. Its weights are automatically tuned by optimization algorithms until it learns to make a correct identification. The network’s deep organization gives it a prodigious ability to spot and take advantage of the most useful features and patterns that recur across the examples, and distinguish different faces. But one downside is that the final network tends to be a black box — the computational solutions derived during training often cannot be deciphered from the myriad weights assigned throughout the layers.
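The idea of tuning weights from input–output examples can be sketched with a deliberately tiny model. The single weight, the squared-error measure and plain gradient descent are assumptions chosen for brevity; deep networks tune many weights at once, typically with more elaborate optimizers.

```python
def train(examples, learning_rate=0.05, steps=200):
    """Fit output = weight * input to the examples by gradient descent."""
    weight = 0.0
    for _ in range(steps):
        # Average gradient of the squared error (weight * x - y) ** 2.
        gradient = sum(2 * (weight * x - y) * x for x, y in examples) / len(examples)
        weight -= learning_rate * gradient  # step downhill on the error
    return weight


# Hypothetical training set in which every output is twice its input.
EXAMPLES = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

print(train(EXAMPLES))
```

In this sketch the learned weight approaches 2, the value that reproduces every example.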

Deep-learning networks can successfully perform perceptual tasks2, but there have been fewer studies of complex behavioural tasks such as navigation. A key aspect of real-life navigation is estimating one’s position following each step, by calculating the displacement per step on the basis of orientation and distance travelled. This process is called path integration and is thought by neuroscientists, cognitive scientists and roboticists to be crucial for generating a cognitive map of the environment3–5. There are several kinds of neuron associated with the brain’s cognitive maps, including place cells, which fire when the organism occupies a particular position in the environment, and head-direction cells, which signal head orientation.
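Path integration as described here amounts to adding up a displacement for every step. The sketch below assumes a flat two-dimensional environment, headings in radians measured anticlockwise from the x axis, and noise-free measurements; the function name is hypothetical.

```python
import math


def integrate_path(start, steps):
    """Estimate position by summing per-step displacements.

    start: (x, y) starting position.
    steps: iterable of (heading, distance) pairs, heading in radians.
    """
    x, y = start
    for heading, distance in steps:
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
    return x, y


# One unit east, then one unit north.
print(integrate_path((0.0, 0.0), [(0.0, 1.0), (math.pi / 2, 1.0)]))
```

Because each estimate builds on the previous one, small errors in heading or distance would accumulate over a long path.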

A third type of neuron, the grid cell, fires when the animal is at any of a set of points that form a hexagonal grid pattern across the environment. Grid cells are thought to endow the cognitive map with geometric properties that help in planning and following trajectories. These cells are found in the brain’s hippocampal formation, a region that, in humans, is involved in spatial learning, autobiographical memories and knowledge of general facts about the world.
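A hexagonal firing pattern of this kind is often idealized as the sum of three plane waves whose directions differ by 60 degrees. The sketch below uses that textbook idealization; it is not the representation learned by the network in the paper, and the function name and default spacing are assumptions.

```python
import math


def grid_rate(x, y, spacing=1.0):
    """Idealized grid-cell firing rate in [0, 1] at position (x, y).

    Peaks lie on a hexagonal lattice whose neighbouring peaks are
    `spacing` apart, with one peak at the origin.
    """
    k = 4 * math.pi / (math.sqrt(3) * spacing)  # wave number giving that spacing
    total = 0.0
    for angle_deg in (0, 60, 120):
        angle = math.radians(angle_deg)
        total += math.cos(k * (x * math.cos(angle) + y * math.sin(angle)))
    # The sum of the three cosines ranges from -1.5 to 3; rescale to [0, 1].
    return (total + 1.5) / 4.5


print(grid_rate(0.0, 0.0))  # on a lattice point
print(grid_rate(0.0, 0.5))  # midway between two neighbouring peaks
```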

Banino and colleagues set out to generate path integration in a deep-learning network. Because path integration involves remembering the output from the previous processing step and using it as input for the next, the authors used a network involving feedback loops. They trained the network using simulations of pathways taken by foraging rodents. The system received information about the simulated rodent’s linear and angular velocity, and about the simulated activity of place and head-direction cells — the latter two acting as an ‘oracle’ for the current location and head direction of the rodent.

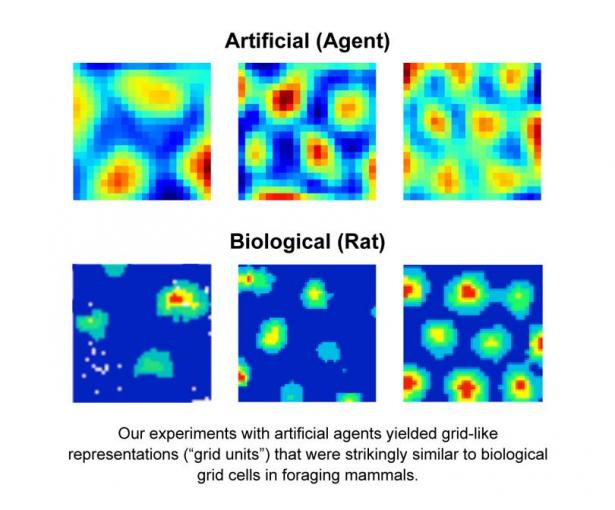

The authors found that patterns of activity resembling grid cells spontaneously emerged in computational units in an intermediate layer of the network during training, even though nothing in the network or the training protocol explicitly imposed this type of pattern. The emergence of grid-like units is an impressive example of deep learning doing what it does best: inventing an original, often unpredicted internal representation to help solve a task.

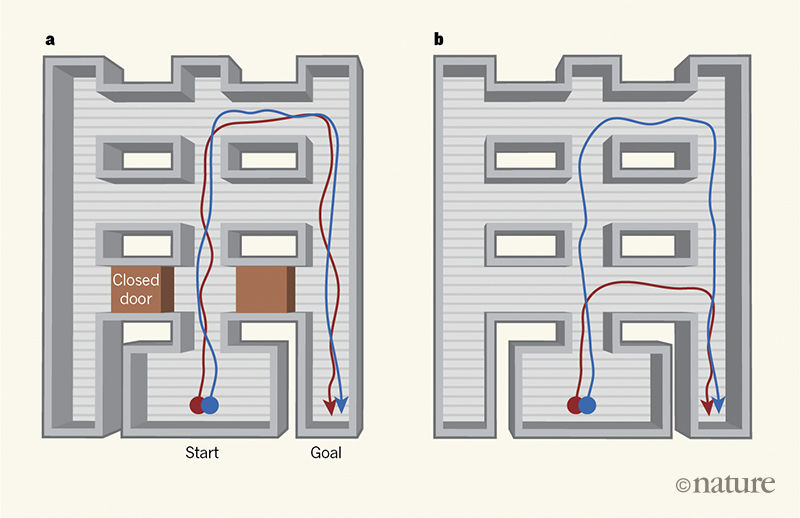

Grid-like units allow the network to keep track of position on the basis of path integration. Can they also help the system to learn to navigate efficiently from its current position to a goal location? To address this question, Banino et al. added a reinforcement-learning component, in which the network learned to assign values to specific actions taken at specific locations. Higher values were assigned to actions that brought the simulated rodent closer to the goal, acting as a reward. The grid-like representation markedly improved the ability of the network to solve goal-directed tasks, compared with control simulations in which the start and goal locations were encoded instead by place and head-direction cells. The trained network found smarter shortcuts when obstacles such as closed doors were removed (Fig. 1), and even extrapolated paths towards goals in a previously unexplored annex of a familiar environment. These results support the idea that grid cells enable the brain to perform vector calculations (calculations about the length and direction of a path) to assist path planning through compartmentalized6 or previously unexplored7 environments.
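The vector calculation mentioned above, finding the length and direction of the straight path to a goal, can be sketched as follows. This assumes that positions are already available as Cartesian coordinates; how a grid code might supply them is not modelled, and the function name is hypothetical.

```python
import math


def goal_vector(position, goal):
    """Return (distance, direction) of the straight path from position to goal.

    The direction is in radians, measured anticlockwise from the x axis.
    """
    dx = goal[0] - position[0]
    dy = goal[1] - position[1]
    return math.hypot(dx, dy), math.atan2(dy, dx)


distance, direction = goal_vector((0.0, 0.0), (3.0, 4.0))
print(distance, math.degrees(direction))
```

A direct vector of this sort stays valid whatever route was taken to reach the current position, which is one reason it could support shortcuts.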

Figure 1 | An AI system learns to take shortcuts. In the mammalian brain, place cells fire when an animal is at a particular position within an environment, head-direction cells fire when the head is in a particular orientation, and grid cells fire when the animal is at points that form a hexagonal grid across the environment. Banino and colleagues1 trained an artificial-intelligence system called a deep-learning network to navigate, by providing it with simulations of rodent foraging patterns, including information about the activity of place and head-direction cells. Some computational units in the network developed grid-cell-like firing patterns (not shown). a, While learning to navigate towards a goal, a system using grid cells (red line indicates a sample path) and a system that used place and head-direction cells instead (blue line) took similar paths. b, But when shortcuts were introduced, for example by opening previously closed doors, only the system that used grid cells found the shorter routes, highlighting the ability of grid-cell-like activity to promote flexible navigation strategies. (Figure adapted from Extended Data Fig. 10 in ref. 1.)

In the future, the authors’ network could be used to explore the consequences of interactions between grid and place cells. In the current network, the simulated place layer does not change during training. However, in the brain, grid and place cells influence each other in ways that are not well understood. Although real-life place cells can remain spatially selective in the absence of grid-cell inputs8, these inputs seem important when an animal is far from external landmarks that can be used to define locations9–11. Under these conditions, place cells presumably rely on path integration and grid cells to maintain an accurate estimate of position. By developing the network such that the place-cell layer can be modulated by grid-like inputs, we could begin to unpack this relationship.

From a broader perspective, it is interesting that the network, starting from very general computational assumptions that do not take into account specific biological mechanisms, found a solution to path integration that seems similar to the brain’s. That the network converged on such a solution is compelling evidence that there is something special about grid cells’ activity patterns that supports path integration. The black-box character of deep-learning systems, however, means that it might be hard to determine what that something is.

Likewise, the fact that the grid representation enhanced goal-directed performance is a compelling proof-of-concept of the role of grid cells in the brain. But the authors had to use correlational analyses, guided by qualitative intuitions, to indirectly infer that the network was making vector calculations. The inability to directly manipulate these calculations in the model makes it difficult to examine the computational principles, algorithms and encoding strategies that make grid-cell representations of space such an efficient solution for navigation. As such, the theoretician ends up in the same quandary as the experimentalist: trying to tease apart a poorly understood complex system to understand it. Making deep-learning systems more intelligible to human reasoning is an exciting challenge for the future.

Nature 557, 313-314 (2018)

doi: 10.1038/d41586-018-04992-7

References

1. Banino, A. et al. Nature 557, 429–433 (2018).
2. LeCun, Y., Bengio, Y. & Hinton, G. Nature 521, 436–444 (2015).
3. McNaughton, B. L., Battaglia, F. P., Jensen, O., Moser, E. I. & Moser, M.-B. Nature Rev. Neurosci. 7, 663–678 (2006).
4. Gallistel, C. R. The Organization of Learning (MIT Press, 1990).
5. Thrun, S., Burgard, W. & Fox, D. Probabilistic Robotics (MIT Press, 2005).
6. Carpenter, F., Manson, D., Jeffery, K., Burgess, N. & Barry, C. Curr. Biol. 25, 1176–1182 (2015).
7. Savelli, F., Luck, J. D. & Knierim, J. J. eLife 6, e21354 (2017).
8. Koenig, J., Linder, A. N., Leutgeb, J. K. & Leutgeb, S. Science 332, 592–595 (2011).
9. Muessig, L., Hauser, J., Wills, T. J. & Cacucci, F. Neuron 86, 1167–1173 (2015).
10. Wang, Y., Romani, S., Lustig, B., Leonardo, A. & Pastalkova, E. Nature Neurosci. 18, 282–288 (2015).
11. Mallory, C. S., Hardcastle, K., Bant, J. S. & Giocomo, L. M. Nature Neurosci. 21, 270–82 (2018).

Francesco Savelli and James Knierim are at the Zanvyl Krieger Mind/Brain Institute, Johns Hopkins University, Baltimore.

This work was done by Andrea Banino, Caswell Barry, Benigno Uria, Charles Blundell, Timothy Lillicrap, Piotr Mirowski, Alexander Pritzel, Martin Chadwick, Thomas Degris, Joseph Modayil, Greg Wayne, Hubert Soyer, Fabio Viola, Brian Zhang, Ross Goroshin, Neil Rabinowitz, Razvan Pascanu, Charlie Beattie, Stig Petersen, Amir Sadik, Stephen Gaffney, Helen King, Koray Kavukcuoglu, Demis Hassabis, Raia Hadsell and Dharshan Kumaran at DeepMind.
