Life is a rather difficult thing to define, but there are a few aspects that most biologists would agree on: it has to maintain genetic material and be able to make copies of itself. Both of these require energy, so it also must host some sort of minimal metabolism.

In large, complex cells, each of these requirements takes hundreds of genes. Even in the simplified genomes of some bacteria, the numbers are still over a hundred. But does this represent the minimum number of genes that life can get away with? About a decade ago, researchers started to develop the technology to synthesize a genome from scratch and then put it in charge of a living cell. Now, five years after their initial successes, researchers have used this system to try to figure out the genetic minimum for life itself.

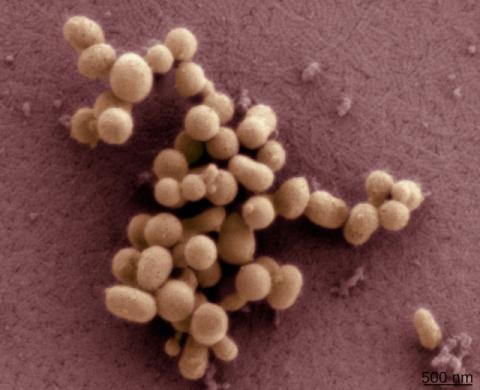

At first, the project seemed to be progressing well. In 2008, the team described the tools it had developed that could build the entire genome of a bacterium. (The team used a parasitic bacterium called Mycoplasma genitalium that started with only 525 genes.) Two years after that, they managed to get a genome synthesized using this method to boot up bacteria, taking the place of the normal genome.

If at first you don't succeed...

After that, however, things went a bit quiet.

A new paper in Science describes why. The team first tried to rationally design what they're calling an HMG, for hypothetical minimal genome. Comparisons of distantly related bacteria suggested that they only had 256 essential genes in common. That figure, however, did not include some essential biological functions, largely because there is more than one way to solve some of life's problems: different bacteria could solve them using different genes. The team tried to identify the genes that could handle some of these other essential functions and add those back into the minimal genome.

It didn't work. There's an entire section in the new paper entitled "Preliminary knowledge-based HMG design does not yield a viable cell."

The authors then turned to empiricism. They performed a heavy mutagenesis of Mycoplasma mycoides, a fast-growing relative of their initial target, and examined anything that grew out. Genes with a mutation in them were considered to be inessential to growth and could be left out of the synthetic genome. The authors engineered a genome with all these genes deleted and then tried to use that to boot up bacteria.

That didn't work either.

It turns out that, even in a small genome like this, some essential functions can be supplied by more than one gene. If you delete one of the genes, the organism can grow just fine. But if you delete both of the genes, it's fatal. The authors tested this by swapping around pieces of their compact, synthetic genome and merging them with the normal, full-sized genome. This gave them some sense of where some of the overlapping essential genes might be located.
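To see why single-gene mutagenesis can mislead here, consider a toy sketch. The gene and function names are invented for illustration and have nothing to do with the real Mycoplasma genome or the authors' analysis; the only assumption is that a cell is viable as long as every essential function has at least one gene left to supply it.

```python
# Toy model: each essential function is covered by one or more genes.
# All names below are hypothetical, made up for this illustration.
FUNCTIONS = {
    "function_A": {"gene1"},           # only one gene can do this job
    "function_B": {"gene2", "gene3"},  # two redundant genes share this job
}
ALL_GENES = {"gene1", "gene2", "gene3", "gene4"}  # gene4 does nothing essential


def is_viable(genes_present):
    """A cell is viable if every essential function still has a gene."""
    return all(providers & genes_present for providers in FUNCTIONS.values())


def single_knockout_dispensable(genes):
    """Genes whose individual loss leaves the cell viable."""
    return {g for g in genes if is_viable(genes - {g})}


# Knocking out genes one at a time flags gene2, gene3, and gene4 as inessential.
dispensable = single_knockout_dispensable(ALL_GENES)

# Deleting all of them at once removes both copies of function_B.
reduced_genome = ALL_GENES - dispensable

print(sorted(dispensable))
print(is_viable(reduced_genome))
```

In this sketch, each redundant gene looks dispensable on its own, but the genome with every "dispensable" gene removed is not viable, which is the same pattern the failed design ran into.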

By this point, it was clear they would need an iterative process: design, synthesize, test, and learn from repeated failures. And their existing setup simply wasn't fast enough to handle this. The researchers performed a major process optimization and got what initially took years (the full synthesis and transplantation of a synthetic genome) down to three weeks. And they performed another, more extensive round of mutagenesis to identify inessential genes.

The incredible shrinking genome

With the new system in place, the team started deleting genes, and it paid careful attention to which deletions were fatal in combination and when a mutation significantly slowed down growth. After repeated rounds, they came up with a genome they call JCVI-syn2.0. This was the first reduced, functional genome, with 478 protein-coding genes and another 38 genes for essential RNAs, or 516 in total. Compared with the 525 genes of Mycoplasma genitalium, it wasn't a huge drop, but it showed them they were on the right track.

This 2.0 version was then subjected to another round of mutagenesis, and the results were used to design JCVI-syn3.0. This gets things down to a total of 473 genes. There are a few genes that can tolerate mutations left in this minimal genome; deletion of those genes is expected to slow the growth of the cell down a bit further. The authors declared that version 3.0 represents "a working approximation to a minimal cell."

What's in a minimal cell? The authors classified the remaining genes according to their functions. In the biggest category, 195 genes encode proteins involved in turning the genetic information in the genome into proteins. They transcribe the genome's DNA into messenger RNAs or translate those RNAs into proteins. Another 34 gene products are used to maintain and duplicate the genome itself. Combined, these account for nearly half the genome.

Another 18 percent of the genome consists of genes for proteins that sit in the cell membrane. These are rather critical, because the team grows these bacteria in a rich, nutritious medium. This means the bacteria don't have to make a lot of key biochemicals themselves, but it does mean these need to be brought into the cell. These membrane proteins handle that transport. Another 17 percent of the genes handle the metabolism inside the cell.

That leaves a full 17 percent of the genes where we have no clear idea of what they do. A few of these look like things we've seen before; they encode proteins that sit in the cell membrane and act as transporters, for example. But we have no idea what they transport. Most of them (65 genes), however, have no identified function whatsoever. Of course, a significantly minimized cell might be the ideal place to find out what that function is.
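The category shares quoted above can be checked with a few lines of arithmetic. This is just a sketch built from the numbers in this article (473 total genes, 195 and 34 genes in the two information-handling categories, 18 and 17 percent for membrane and metabolism); the variable names are made up here, and the paper's own bookkeeping may differ slightly.

```python
# Figures taken from the article text; variable names are illustrative.
TOTAL_GENES = 473            # JCVI-syn3.0
expression_genes = 195       # turning genetic information into proteins
genome_upkeep_genes = 34     # maintaining and duplicating the genome
membrane_pct = 18            # stated directly as a percentage
metabolism_pct = 17          # stated directly as a percentage
no_known_function_genes = 65

# Share of the genome devoted to handling genetic information.
information_pct = 100 * (expression_genes + genome_upkeep_genes) / TOTAL_GENES

# Whatever is left over has no clearly assigned role.
unclear_pct = 100 - information_pct - membrane_pct - metabolism_pct

# Share of the genome with no identified function at all.
no_function_pct = 100 * no_known_function_genes / TOTAL_GENES

print(f"information handling: {information_pct:.1f}%")
print(f"unclear function:     {unclear_pct:.1f}%")
print(f"no function at all:   {no_function_pct:.1f}%")
```

The information-handling share comes out just under half, and the remainder rounds to the 17 percent quoted above.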

The authors don't report those experiments here. But they do provide some indication of the work they're doing. For example, they substitute a few changes into an essential gene (one for a ribosomal RNA) and test whether the cells can survive. And they shift things around so that, in part of the genome, all the genes involved in a specific process are clustered together. This might make it relatively simple to swap out the cell's entire DNA metabolism, for example, and replace it with a different version.

The thing that interests me most, however, is not any sort of carefully engineered project. Instead, it would be great to grow up a population of these cells and gradually shift them to less nutritionally generous conditions. In essence, it could be a bit like starting with an early cell and seeing how more complex life evolves this time around. Decades from now, it could tell us a lot about how much of what we think of as being typical of life is a clear optimization, and how much is simply the result of lucky accidents.

Science, 2016. DOI: 10.1126/science.aad6253.

John Timmer became Ars Technica's science editor in 2007 after spending 15 years doing biology research at places like Berkeley and Cornell.
